Every extra 50 ms on your Solana RPC path leaks edge from your trading bots. Treat RPC like a generic API gateway—and you turn your trading system into an expensive science project.

If you run HFT or quant strategies, you already think of FIX gateways, market data feeds, and colocation as strategic dependencies. Solana RPC belongs in the same category. The problem is that most comparison frameworks for RPC are built for generic DeFi apps, not for trading bots.

This article gives you a trading-focused framework to evaluate Solana RPC providers across five pillars:

- Performance and uptime

- Price and cost structure

- Security

- Flexibility and customization

- Support and incident handling

The goal is simple: help a TradFi or HFT leader entering Solana choose infra that protects P&L, reduces tail risk, and does not trap you in a commodity endpoint.

Solana and trading bots: what is different from REST APIs

If you come from equities or futures, Solana feels familiar in that it delivers high message rates and tight latency budgets. The main differences that matter for RPC selection:

- Parallel execution

Solana executes transactions in parallel across accounts. Popular markets and programs become hotspots. When your strategy lives on these hotspots, tiny RPC delays or inconsistent views of account state hurt more.

- Account-centric accessInstead of “symbols,” you subscribe to accounts and programs. Reads and writes that touch the same accounts compete for execution. The quality of your RPC node’s access to the validator network and its internal indexing directly affects your view of this state.

- Read-heavy vs write-heavy bots

- Read-heavy are signal generation, cross-exchange arbitrage watchers, risk and funding systems.

- Write-heavy are market makers, sniping and liquidation bots, and high-frequency arbitrage.

Read-heavy flows stress bandwidth, indexing, and subscription performance. Write-heavy flows stress:

- SWQoS and priority fees;

- Routing to well-connected validators;

- How your provider handles congestion and transaction queueing.

During Solana congestion windows in 2022–2024, public dashboards such as Solana Status and Solana Beach showed spikes in TPS, block production delays, and partial outages. For trading desks, these windows are when spreads widen and opportunity is highest. RPC behavior during those events, not “normal days,” should drive your evaluation.

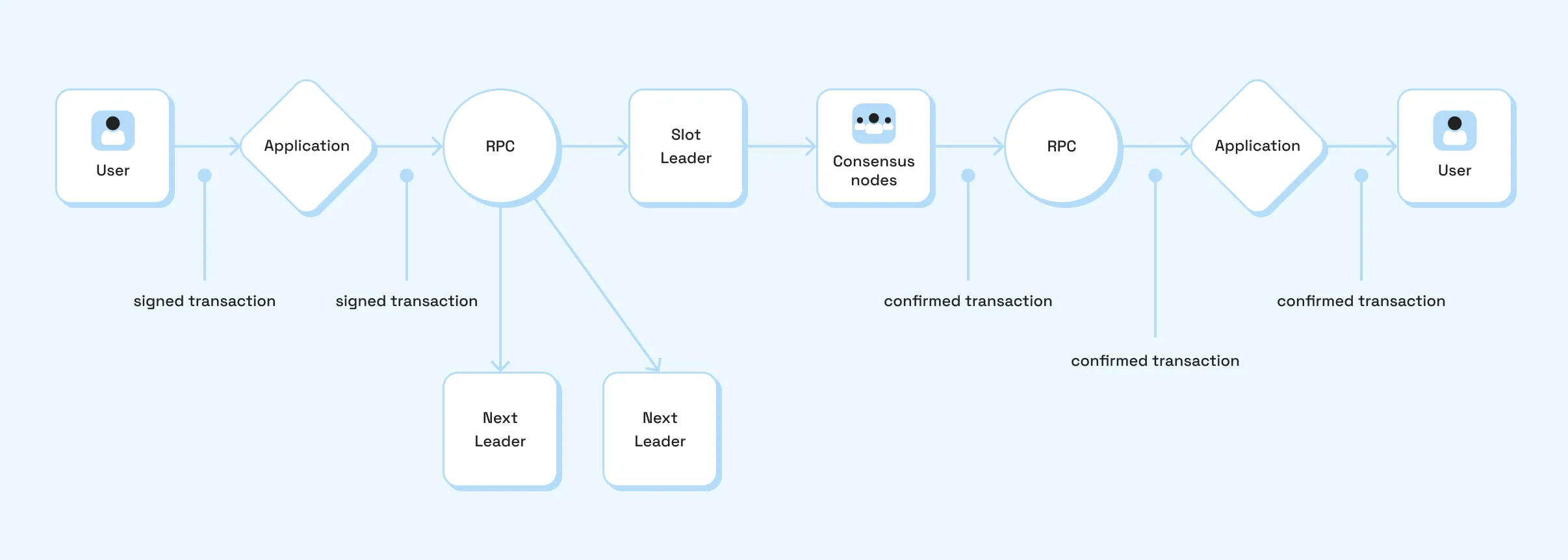

Pillar 1: Performance and uptime

For trading, “works fine” is not a metric. You need definitions that match how your bots behave.

A useful minimal set of metrics

- Latency from your infra to RPC

Measure p50, p95, and p99 round-trip for calls such as getLatestBlockhash, getBlock, getProgramAccounts, and getAccountInfo. Include websocket subscription delay: time from slot or transaction creation to message arrival at your client.

- Jitter and tail behavior

Track how often latency is 2–3x typical values, especially in volatile periods, and how tails react when Solana TPS rises.

- Provider-side uptime and degradation patterns

Separate Solana-layer events from provider-specific ones. Look for history during major 2022–2025 incidents visible on Solana Status.

How dedicated Solana HFT nodes change the picture

Dedicated nodes are more valuable to HFT trading bots than public RPC nodes. We can’t say for all the providers, but here are the characteristics of our Solana HFT dedicated node:

- An ultra-fast dedicated Solana node powered by:

- Yellowstone Geyser plugins for streaming raw blockchain data to your system

- Jito Shredstream for direct access to Solana’s block production pipeline before transactions are visible on standard RPC endpoints

- A no-RPS-limits setup where you push traffic as far as the hardware can handle, on bare metal servers with up to 1.5 TB DDR5 RAM in the Pro tier.

The performance of the RPC Fast dedicated node for Solana HFT is as follows:

- Sub-4 ms latency via a dedicated node in the nearest location with optimized configs and custom RPC tuning;

- Propagation times under 100 ms and a 99 percent transaction landing rate when used with the SOL Trading API by bloXroute and SWQoS;

- 382 ms faster transactions than traditional RPC setups for the combined path.

Key questions for providers

Pillar 2: Price and cost structure

At the C-level, RPC cost is often misread as “price per million requests.” For trading bots, you care about:

- How pricing behaves under short, violent bursts;

- How much capacity and predictability you get for that spend;

- How many internal engineering hours you burn around the RPC’s quirks.

The tradeoff is clear:

- Any shared per-request plan: lower entry bill, higher risk of rate limiting at the worst moments;

- Dedicated node: higher fixed cost, but predictable behavior and no generic RPS throttling.

Key questions for providers

Pillar 3: Security

Your concerns here are isolation, access control, and how much of your strategy behavior leaks into shared infrastructure or logs. Public RPC endpoints are often:

- Multi-tenant;

- Lightly protected;

- Monitored using broad logging with payload details.

That is fine for hobby projects. It is not fine if you run a meaningful project.

Dedicated HFT nodes like those described on the RPC Fast page are deployed as single-tenant machines with private networking, optional IP whitelisting, and firewalling, plus monitoring and incident response. This pattern is now standard across serious providers:

- A dedicated node per client or per strategy group;

- Private or VPC-connected endpoints from chosen regions (EU, US, Asia);

- Configurable logging and telemetry with tighter access controls.

Questions for providers

All in all, your evaluation should cut through marketing to specifics:

- How can we restrict access?

Confirm support for IP allowlists, VPN or VPC peering, and TLS-only connections. - How are keys handled?

Look for clear answers on API key generation, rotation, and segregation between environments. - How are logs and traces treated?

Ask where logs live, how long they are retained, who has access, and whether payloads with transaction details are stored.

Pillar 4: Flexibility and customization

This pillar is where the bill and the edge start to fight each other.

On one side, you want a generic, shared RPC that looks cheap and simple. On the other, your more advanced strategies need infra that bends around them: custom data paths, different retry logic, and priority routing. Every extra knob has a cost, and not all desks are far enough along to justify it.

At a high level:

- Shared, generic RPC

You pay less and get a fixed feature set. You accept that indexes, caching, and failover logic reflect the average dApp, not your trading book. Good for early experiments, not for serious size.

- Semi-dedicated or dedicated trading nodes

You pay more, but you decide which programs and accounts matter, which plugins run, and how aggressive the retries and backpressure are. You get more predictable behavior at the cost of higher base spend and tighter coupling between your team and the provider.

- Heavy customization

You pay the most, not only in invoices but in your own engineering time. Custom Geyser plugins, program-specific indexers, and tailored transaction paths require design, observability, and change control. Done well, this supports new strategies that generic RPC can block. Done poorly, it becomes another fragile system to babysit.

The dilemma for a C-level lead is simple: at what point does the value of a new strategy or better execution justify locking into more complex, customized infra.

You should expect any serious provider, including RPC Fast, to sit somewhere past the “generic shared RPC” line and offer at least:

- Dedicated or semi-dedicated Solana nodes where you control what runs on the box;

- Geyser-based streaming, so you shape how raw blockchain data lands in your stack;

- Hooks for custom indexers and data sinks for specific wallets, tokens, or programs;

- Paths for priority routing and SWQoS-aware behavior when you grow into it.

Your question that should be announced inside your team as a buyer is not “who offers more features” but:

Questions for providers

Pillar 5: Support and incident handling

You will only understand a provider’s support when Solana behaves badly. A good Solana HFT provider:

- Designs for redundancy and failover

For example, RPC Fast setups may include dual bare-metal nodes with unified endpoints and pre-synced spares, enabling ~15-minute node swaps or scale-outs for production clusters. Those patterns matter when a single machine or region has issues.

- Gives you direct access to engineers

You want real-time channels like Telegram or Slack with the ops team, not a ticket queue. If there’s someone who can really solve the urgent matter quickly, it’s only the top-experienced expert.

- Shares clear incident stories

Any provider should have a story that describes the “before” state, including gaps and downtime on public or in-house endpoints, latency spikes under load, and single-endpoint risk—and describe how they manage to make the happy end for ever “after.”

To evaluate providers, walk through at least one real incident scenario together:

- Solana sees a network issue that slows block production and increases transaction failures.

- Your bots see higher

getProgramAccountslatency and more failed writes.

Ask:

- What did you detect and when?

- How quickly did clients hear from you and through which channels?

- What configuration or routing changes did you apply to protect trading workloads?

- How did you validate that there was no data loss on your streaming side?

Questions for providers

Vendor comparison checklist

You can use this table as a quick scorecard in vendor meetings.

You do not need every vendor to tick every box. You do need consistency: the provider’s story on performance, design, and support must match what an HFT team expects when something breaks during a regime shift.

Where to go from here

Choosing a Solana RPC provider for trading bots is not a procurement exercise. It is a decision about how much edge and incident risk you want to carry in production.

Use the five mentioned pillars as your lens, then test the claims with your own workloads from your own regions.

If you want a second opinion on your current Solana RPC setup, book a free 1-hour technical review of your architecture with the RPC Fast team. Our engineer can walk through your trading strategies, infra layout, and failure modes, and help you decide whether a dedicated Solana HFT node fits your needs or if a different approach makes more sense.

.svg)

.svg)

.svg)