BNB Chain is accelerating, and faster finality is already live. As sub‑second blocks are on the roadmap, your RPC layer now faces tighter cache windows and higher write pressure. Done right, your users see smoother swaps and lower gas. Done wrong, you see spikes of 5xx and stuck frontends.

Data growth compounds the challenge. State size on BSC rose roughly 50 percent from late 2023 to mid‑2025, driven by higher block cadence and activity. The core team is shipping incremental snapshots and storage refinements to ease operations, but capacity planning still belongs on your side of the fence.

➣ Leave 20 percent headroom and standardize snapshot rotations.

Client strategy matters more this year. Geth remains a solid choice for full nodes. Erigon is the pragmatic path for archive with a lower disk footprint and a separate RPC daemon that holds up under load. Reth is entering the picture to reduce single‑client risk and push staged sync performance on BSC and opBNB.

➣ Your goal is resilience without complexity creep.

What do these new requirements mean for you on the technical side? It means that one public full node behind a load balancer no longer cuts it. Outcomes you should aim for:

- Sub‑100 ms p95 on core reads during peaks.

- Four-nines availability across zones.

- Recovery from a failed full node in under 30 minutes using warm snapshots.

- Archive queries that stay responsive without soaking disk IO.

Your infrastructure is calling for renovation if your backlog includes chain reorgs, token launches, or analytics backfills. The rest of this guide gives you the exact picks: hardware tiers by use case, sync and snapshot strategies, Kubernetes reference blueprints, metrics and alerts tied to real failure modes. All cross‑linked to BNB docs and roadmap above.

2026 RPC operator checklist

BNB RPC basics you need to get right

Your execution client drives latency, disk usage, and how you sync. Pick it first. Then size hardware, storage, and your snapshot plan around it. Geth is the standard for full and validator roles. Erigon is the efficient path for the archive. But let’s start from the basics:

Node types and when to use them

Pick the node type based on your query depth and reliability goals. Then right‑size hardware and snapshots.

- Fast node. Lowest resource for the latest world state. Suitable for app RPC.

- Full node. General RPC backend that validates state. Standard for most dApps and wallets.

- Archive. Full historical state for deep analytics and traces. Use Erigon to cut the footprint and accelerate sync.

- Validator. Consensus duties. Use sentry topology and keep the validator RPC private.

Client choices in 2026: Geth vs Erigon for BNB

Most production teams combine both. An Erigon archive node stores full historical data for analytics and compliance queries, while one or more Geth fast nodes serve live chain traffic at the tip. A proxy layer routes requests by block height—new blocks to Geth, older ranges to Erigon—balancing freshness with storage efficiency and keeping critical RPCs below 100 ms.

Why Erigon for the archive nodes

The archive node is where costs and reliability diverge.

- Staged sync. Pipeline reduces initial sync time from weeks to days on BSC. Docs reference about three days from scratch for the Erigon archive.

- Separate rpcdaemon. Decouples RPC serving from execution for predictable latency under load.

- Smaller disk. Historical note from operators and vendors shows Geth archive in the tens of TB range vs Erigon in single‑digit TB. Example: NodeReal cited ~50 TB for Geth archive vs ~6 TB for Erigon in earlier BSC eras.

If you need validator‑compatible semantics or Geth‑specific tooling, keep Geth for fast/full. Put the archive on Erigon to reduce storage and ops toil.

Disk I/O truths

- NVMe is preferable for consistency at the chain tip. HDDs and networked-only volumes fall behind during bursts.

- Plan headroom for growth. BSC state grew ~50 percent from Dec 2023 to Jun 2025 per core team notes on incremental snapshots.

Risk callouts

Small mistakes create outages. Lock down these two first.

➣ Do not expose admin RPC over the internet.

Admin and HTTP JSON‑RPC on validator or full nodes invite takeover and fund loss. Keep validator RPC private. Place public RPC on separate hosts with WAF and rate limits.

➣ Snap sync vs from the genesis block.

Use official snapshots. Syncing from genesis on BSC takes a long time and fails often on under‑specced disks. Snapshots cut time to readiness from weeks to hours.

Hardware and storage profiles that hold up under load

This guidance separates teams that run stable RPC from teams that fight fires. The difference is in resource planning and storage architecture. Pick the right class of machine and disks. Pair it with snapshots and client choices that match your workload. That mix becomes a competitive edge under sub‑second blocks and fast finality.

Baseline requirements and cloud options

➣ Disclaimer: Prices vary by region and term. Use these as directional on‑demand references and validate in your target regions.

Notes

- GCP requires attaching a Local SSD for NVMe‑class performance. Price is region‑specific. Example rate $0.08 per GB per month for N2 in the Iowa central US region.

- AWS i4i provides local NVMe instance storage, which is suitable for Erigon data directories. Validate the persistence model and backup plan.

- Azure Lsv3 includes local NVMe and strong uncached IOPS, a good fit for archive workloads.

Sync strategies that won’t waste weeks

Snapshot first for Geth full nodes

Starting from a fresh snapshot cuts sync time from weeks to hours. Your team gets a healthy node online fast and reduces the risk of stalls and rework. Explore more: BNB Full Node guide and BSC snapshots.

- Pull the latest trusted chaindata snapshot;

- Restore to your datadir and start Geth;

- Verify head, peers, and RPC health before going live.

Note: It takes hours from snapshot restore to usable RPC, but fully stable, warmed caches, validated state, and indexed internals need more time, depending heavily on hardware/snapshot quality.

Snap sync, then steady full sync

Snap sync catches up quickly, then full sync keeps you consistent at the chain head. This balances speed to readiness with day two reliability. Explore more: Sync modes in Full Node docs.

- Start with snap sync for catch-up;

- Let it auto switch to full once at head;

- Monitor head lag and finalize your cutover window.

Use fast mode only for the catch‑up window

Disabling trie verification speeds catch-up when you trust the snapshot source. Use it to reach head faster, then return to safer defaults. Turn on fast mode during initial sync. Return to normal verification once synced.

➣ Explore more: Fast Node guide

Alert on head lag and RPC latency

Your business cares about fresh data and fast responses. Persistent block lag or rising P95 latency hurts swaps, pricing, and UX. Explore more: BNB monitoring metrics and alerts.

- Alert when head lag exceeds your SLO for more than a few minutes;

- Alert on P95 RPC latency breaches;

- Fail over reads before users feel it.

Prune mode minimal for short history with traces

Minimal prune keeps recent days of history and supports trace methods with lower storage. Good for apps that query recent activity at scale. Explore more: Archive and prune options and bsc‑erigon README.

- Set minimal prune for hot paths and recent traces;

- Keep archive profiles only where needed;

- Align retention with product query patterns.

Make mgasps your early warning

During catch-up, mgas per second shows if the node processes blocks at the expected rate. Low mgasps points to disk or peer quality, not app bugs. Track mgasps during sync. If it drops, check NVMe throughput and refresh peers.

➣ Best Practices and syncing speed

Keep RPC private by default

Open RPC invites abuse and noisy neighbors. Private RPC with allow lists preserves performance and limits the attack surface. Put RPC behind a firewall or proxy. Disable admin APIs. Expose only required ports to trusted clients.

Plan realistic time budgets

Teams lose weeks by underestimating sync. Set expectations and book the window to avoid fire drills. Geth from snapshot reaches production inside a day on solid NVMe and links. The Erigon archive requires approximately three days on robust hardware, plus time for backfills.

➣ Full Node by snapshot and Erigon archive guidance

Operate with light storage and regular pruning

Large stores slow down reads and compaction. Pruning on a schedule helps maintain stable performance and reduces storage waste. Schedule pruning during low traffic. Keep a warm standby to swap during maintenance. That’s all about avoiding the UX pitfalls, preventing them at the infra design level.

➣ Node maintenance and pruning

Production RPC: from single node to HA, low‑latency clusters

Single node hardening

Lock down port 8545 to your private network only. Never expose the admin API to the public internet—this is your mantra now. Attackers constantly scan for open RPC ports; one misconfiguration can lead to full node compromise or drained keys. Use firewalls or VPC security groups to isolate access.

Allocate around one‑third of RAM as a cache for Geth. For a 64 GB machine, set --cache 20000. This ensures the node keeps hot state data in memory instead of hitting disk I/O on every call. Geth and BNB Chain recommend these ratios for optimal performance and stability.

Run your node as a systemd service and configure it for a graceful shutdown. This avoids chain corruption during restarts or maintenance and prevents multi-day resync cycles that occur when Geth crashes abruptly. Follow BNB Chain’s service templates for managed restart and monitoring.

Track real service level objectives—not vanity uptime. Your internal SLOs should be at least 99.9% uptime, median response time (p50) under 100 ms, and 99th percentile (p99) under 300 ms for critical calls. Set up alerts when RPC latency exceeds 100 ms to investigate potential issues before they escalate into incidents.

Horizontal scale pattern

A production setup uses multiple full nodes managed by Kubernetes, sitting behind an L4 or L7 load balancer. Add health checks, DNS‑based geo routing, and sticky sessions for WebSocket (WS) users. This architecture makes the RPC layer resilient to single node failures and network partitioning.

Add a caching tier in front to reduce node CPU usage and improve latency. The Dysnix JSON-RPC Caching Proxy provides method-aware caching, load balancing, and per-key limits. It supports WebSocket pass-through, allowing clients to maintain persistent channels while benefiting from cache hits.

Subscription stability mini-playbook (WS) under faster blocks

This is where many “RPC is down” incidents actually originate in high-traffic dApps.

Predictive autoscaling for spikes

Traditional HPA scales too late for traffic spikes. Predictive scaling starts new nodes minutes or hours before an event—like a token launch or NFT mint—so they’re warm and balanced before load hits. This protects your p99 latency and user experience under bursty conditions.

PredictKube uses AI models that look up to six hours ahead, combining metrics like RPS, CPU trends, and business signals. It’s a certified KEDA scaler that continuously tunes cluster size, keeping high‑load RPCs stable without overprovisioning.

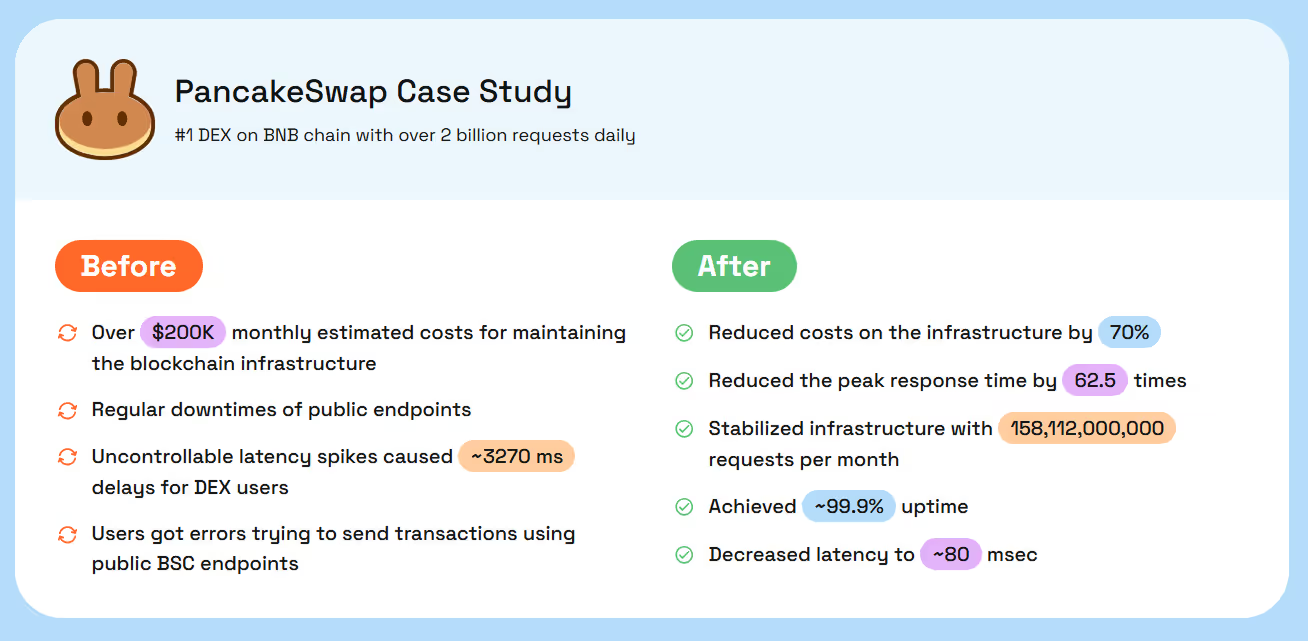

Micro‑case from RPC Fast and Dysnix engineers' experience: PancakeSwap on BNB

When PancakeSwap started hitting 100,000 RPS, reactive autoscaling and standard caching were no longer enough. With PredictKube and smart JSON‑RPC caching in place, they achieved pre‑scaled readiness before major events, cutting response times by 62.5×. Latency dropped to around 80 ms, while infrastructure costs fell 30–70% depending on load patterns.

Security, reliability, and cost controls

Tail latency and outages trigger failed swaps, liquidations, and lost orderflow. 2025 updates improved auth, spam resistance, fast recovery, and storage economics. And here’s what has changed:

TL;DR

- Secure the edge, isolate bursts, and split read/write paths.

- Predict scale from mempool signals, not CPU graphs.

- Tier storage and hydrate archives on demand.

Features that matter

- ABI-aware WAF with per-method quotas: stops calldata spam without throttling healthy reads, so premium flows keep their SLOs during spikes.

- Predictive autoscaling from mempool and head drift: scales before load hits, keeping P99 under control where MEV bots care.

- Snapshot blue/green rollouts: upgrades with rollbacks in minutes, not maintenance windows.

Common challenges → solutions

- Burst writes starve reads → separate pools with priority lanes and backpressure to protect user UX.

- Reorgs break clients → sticky reads plus reorg-aware caches to avoid transient mismatches.

- Archive bloat → cold storage with on-demand hydration to reduce costs while maintaining full history accessibility.

Tooling and ecosystem to consider

- API edge: NGINX, Envoy, Cloudflare with Workers for method-aware throttling;

- Caching: Redis with Lua cost accounting, in-proc LRU on the node;

- Indexing: Elastic, ClickHouse, or Parquet-backed data lake for logs/traces;

- Observability: Prometheus, Grafana, OpenTelemetry, eBPF for syscall and I/O profiling;

- Predictive scaling: Dysnix PredictKube for spike pre-scaling and head-lag-aware admission control;

- Mempool routing: Partner with bloXroute for specific flows, if relevant.

Finality APIs and chain-specific RPCs you must account for

BSC is EVM-compatible, but it is not Ethereum. The consensus mechanism, finality model, and feature set diverge in ways that affect your RPC layer. If you treat BSC as "just another Geth fork," you will miss critical integration points and capacity planning requirements.

What is different on BSC

BSC uses Parlia consensus, a Proof of Staked Authority model with fast finality and validator rotation. Ethereum uses Gasper (LMD-GHOST + Casper FFG). These differences create unique RPC requirements and failure modes that do not exist on the Ethereum mainnet.

BSC-specific RPC methods you must support

BSC extends the standard Geth JSON-RPC API with methods for finality, governance, and validator operations. If your RPC infrastructure does not expose these, BSC-native dApps and tools will break.

- Finality API: Query finalized block height and status. Required for applications that need strong consistency guarantees (bridges, custody, compliance). See BSC Finality API documentation.

- Blob API: Retrieve and verify blob data stored on the execution layer. Required for rollups and data availability applications using EIP-4844. See BSC Blob API documentation.

- Validator and staking methods: Query validator set, staking status, and governance proposals. Required for staking dashboards, validator monitoring, and governance interfaces.

Capacity planning for BSC-specific features

- Blob storage grows faster than state. If you run archive nodes, plan for blob data accumulation. Blobs are stored on the execution layer, not pruned by default, and require separate retention policies. Tier cold blob data to cheaper storage (S3, GCS) and keep recent blobs on NVMe for fast retrieval.

- Finality queries add read load. Applications that poll finality status (bridges, exchanges, custodians) generate steady read traffic. Cache finalized block height with a TTL of 1-2 blocks to reduce node load.

- Faster block times increase write and cache churn. At 3-second block times, your cache TTL and invalidation logic must be tighter. At sub-second block times (roadmap), you will need to rethink caching entirely: shorter TTLs, more aggressive prefetching, and probabilistic cache warming based on mempool signals.

Why this matters in 2026

As BSC moves toward sub-second block times and broader adoption of blobs for rollups and data availability, the gap between "Ethereum-compatible" and "BSC-native" widens. Your RPC infrastructure must account for these differences upfront, or you will spend 2026 firefighting performance issues and compatibility bugs that could have been designed out.

Build vs buy: when to run your own vs managed, or combine both

If custom RPC or data locality drives revenue and you have SRE coverage, run your own.

If latency, SLA, and focus on product speed drive value, use managed with dedicated nodes.

Run your own when

- You need nonstandard RPC, deep custom tracing, archive pruning rules, or bespoke mempool listeners.

- Data residency or customer contracts require strict locality and private peering.

- You have SRE depth for 24/7 on‑call, incident SLAs, kernel tuning, and backlog grooming.

- You plan to run experiments that managed providers refuse, like modified Geth/BSC forks or custom telemetry.

Why it’s hard

- BNB full and archive nodes are IO‑bound and network‑sensitive. Hot storage, peering, and snapshots decide tail latency.

- Upgrades and reindex events drive multi‑hour risk windows without prescaling and traffic draining.

- Cost optics hide in ENI/egress, SSD churn, and snapshot storage.

Use managed plus dedicated nodes when

- Time‑to‑market, predictable p95 under 100 ms, and bounded tail p99 matter more than bare‑metal control.

- You want SLA, geo routing, and cost flexibility without adding headcount.

- You need burst capacity during listings, airdrops, or MEV‑heavy windows without cold‑start penalties.

What “managed + dedicated” should include

- Dedicated BSC nodes per tenant with read/write isolation and pinned versions.

- Global anycast or DNS steering, region affinity, and JWT for project‑level auth.

- Hot snapshots with rotation, rapid restore, and prewarmed caches for the state trie.

- Tracing toggles, debug APIs on gated endpoints, and per‑method rate limits.

Self‑hosted cluster with embedded ops

- Dedicated, geo‑distributed nodes behind multi‑region load balancing.

- Read pool with caching, snapshot rotation, automated health checks, and auto‑heal.

- JWT auth, per‑token quotas, and method‑aware routing.

- Blue‑green upgrades with drain, replay tests, and rollbacks.

- Metrics you care about: p50/p95/p99, error buckets by method, reorg sensitivity, and snapshot freshness SLO.

Method-aware SLOs and error budgets

Generic latency targets miss the operational reality: different RPC methods have different failure modes, cost profiles, and user impact. A failed eth_sendRawTransaction loses a trade. A slow eth_getLogs query delays a dashboard refresh. Your SLOs should reflect that.

Operational notes

- Budget your error allowance by method. Allocate more of your error budget to read-heavy and trace methods. Protect write-path and read-hot methods with stricter limits and faster failover.

- Measure propagation, not just response time. For eth_sendRawTransaction, track whether the transaction appears in the mempool of peer nodes within 500 ms. A fast 200 OK that never propagates is a silent failure.

- Tie alerts to user impact. Alert when p95 latency for read-hot methods exceeds 100 ms for more than 3 minutes, or when write-path success rate drops below 99.5% over a 5-minute window. Ignore isolated spikes; focus on sustained degradation.

- Enforce range limits at the edge. Public endpoints and shared RPC should reject eth_getLogs queries spanning more than 2,000-5,000 blocks. Archive and premium tiers can allow larger ranges with queueing.

This framework turns "RPC is slow" into actionable signals. You know which pool is saturated, which method is failing, and where to add capacity or caching.

Providers and prices

We have conducted a small price research for you, but please note that obtaining a quote from providers for your specific requirements may alter the price you see here.

TL;DR

- RPC Fast offers BNB RPC node clusters with dedicated and geo-distributed setups, providing the best price-quality balance. These clusters are positioned for low-latency reads and higher RPS, with custom requirements also covered.

- GetBlock sits at the premium end, offering unlimited CU/RPS and only three regions, while Allnodes is the most transparent in terms of pricing but charges a steep premium for archive storage.

- Chainstack’s metered “compute + storage” model has a baseline cost of around $360/month, but the total cost depends on the SSD size for BSC; Enterprise offers unlimited requests on dedicated servers.

- QuickNode supports BSC dedicated clusters with fixed enterprise pricing; however, you will need to engage with sales to obtain the necessary numbers and configuration details.

- Infura and Alchemy do not publicly offer dedicated BSC nodes, so enterprise buyers should treat them as “custom if available” rather than a ready option.

Further reading

Thank you for getting this far with us through updates on the BNB chain and selecting nodes! Let’s continue the investigation together:

- BNB Chain Full Node guide;

- BNB Chain Node best practices and hardware;

- BNB Chain Archive with Erigon;

- NodeReal on BSC Erigon footprint;

- bsc-erigon repository and ops notes;

- PredictKube AI autoscaler and KEDA integration;

- PancakeSwap micro‑case and PredictKube case hub;

- JSON-RPC Caching Proxy.

.svg)

.svg)

.svg)