Recently, we hosted a private session with OVHcloud and ~20 fast‑growing startups. Among attendees, we were pleased to welcome payment services, L1/cross-chain projects, compliance & security innovators, and AI-powered teams. The talk was splendid and brought a mass of insights for all the participants.

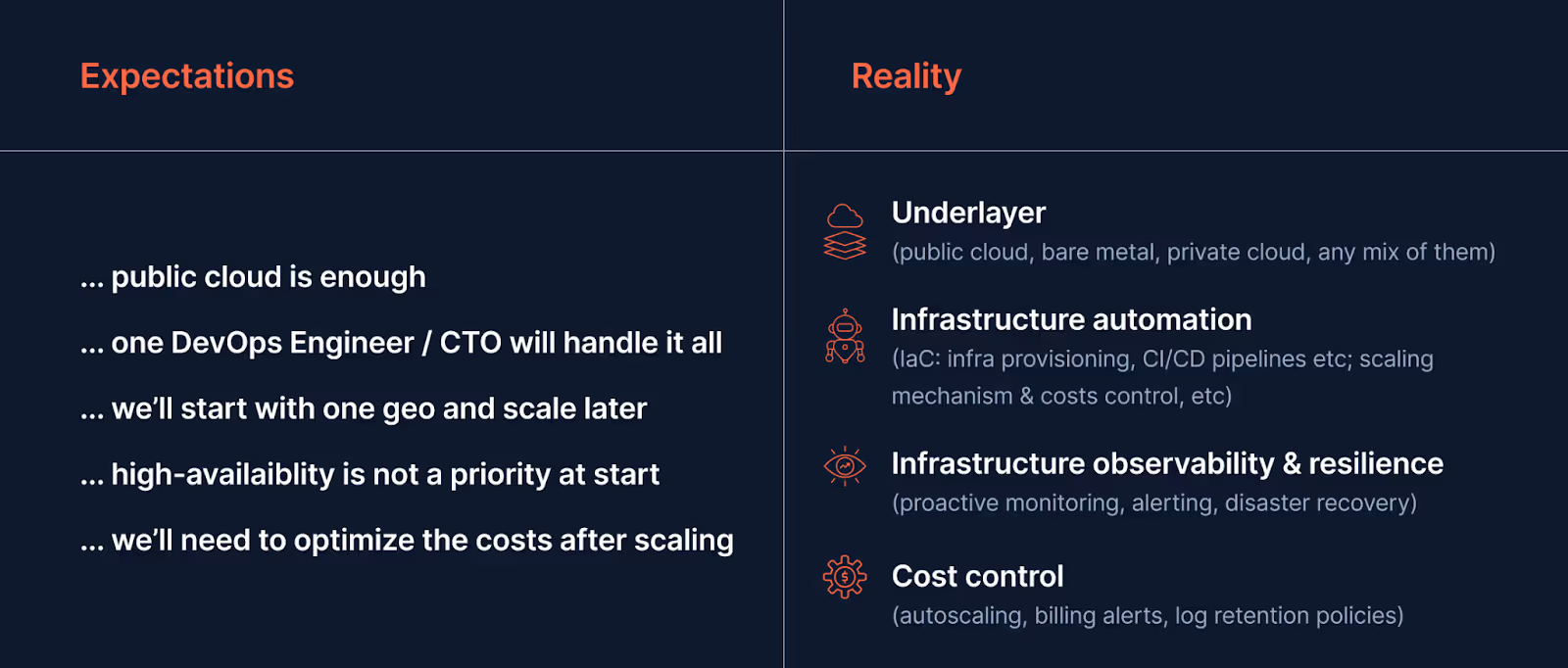

These Web3 teams face the same hurdles in 2025: spikes that break autoscalers, fraud and compliance latency that blocks revenue, GPU scarcity and spend creep, and bridges or validators that stall under load. Market dynamics amplify this. Chain upgrades and L2 expansions compress finality budgets. Regulators tighten audit expectations. AI-driven products raise user latency expectations to sub‑100 ms.

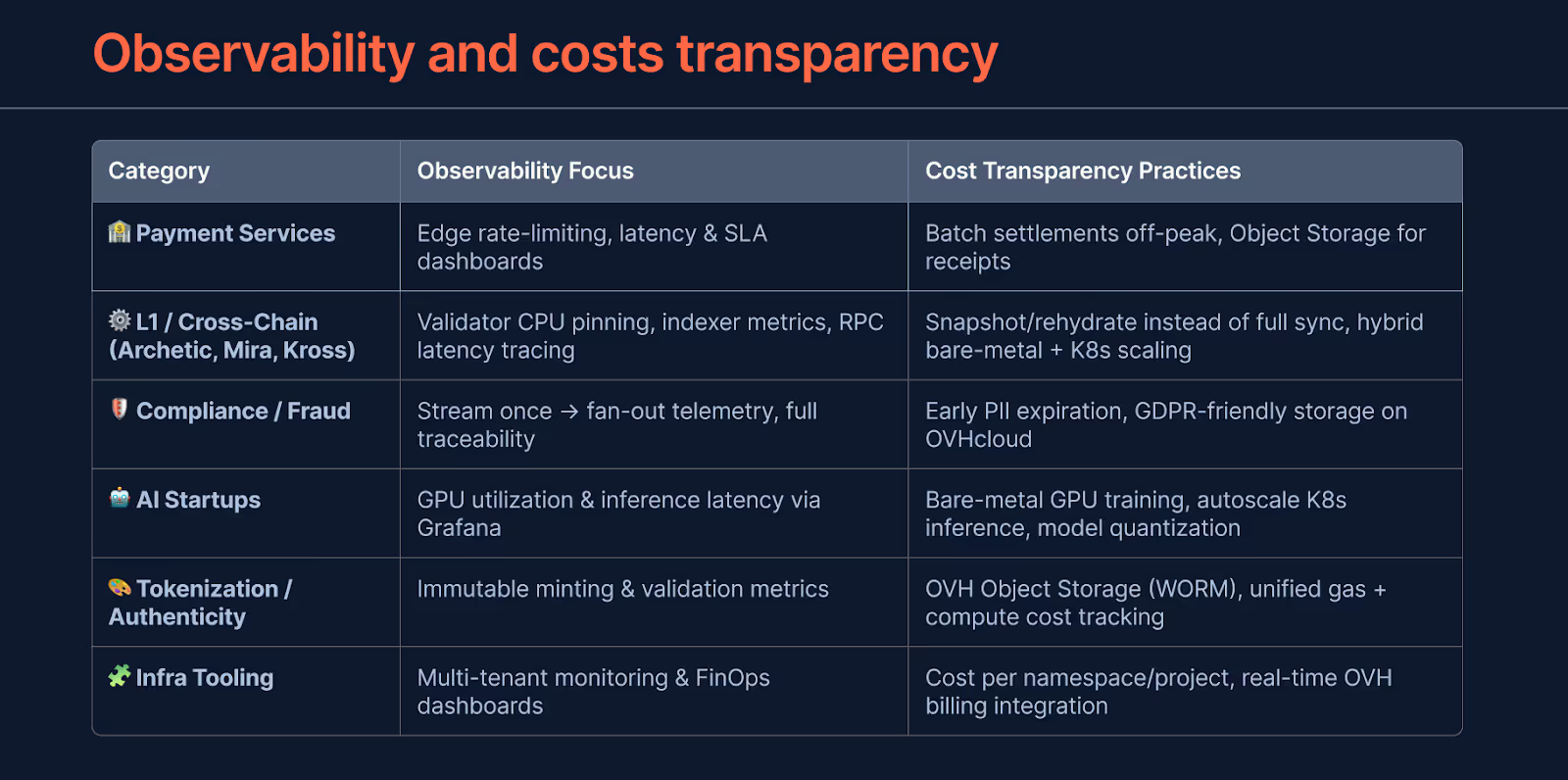

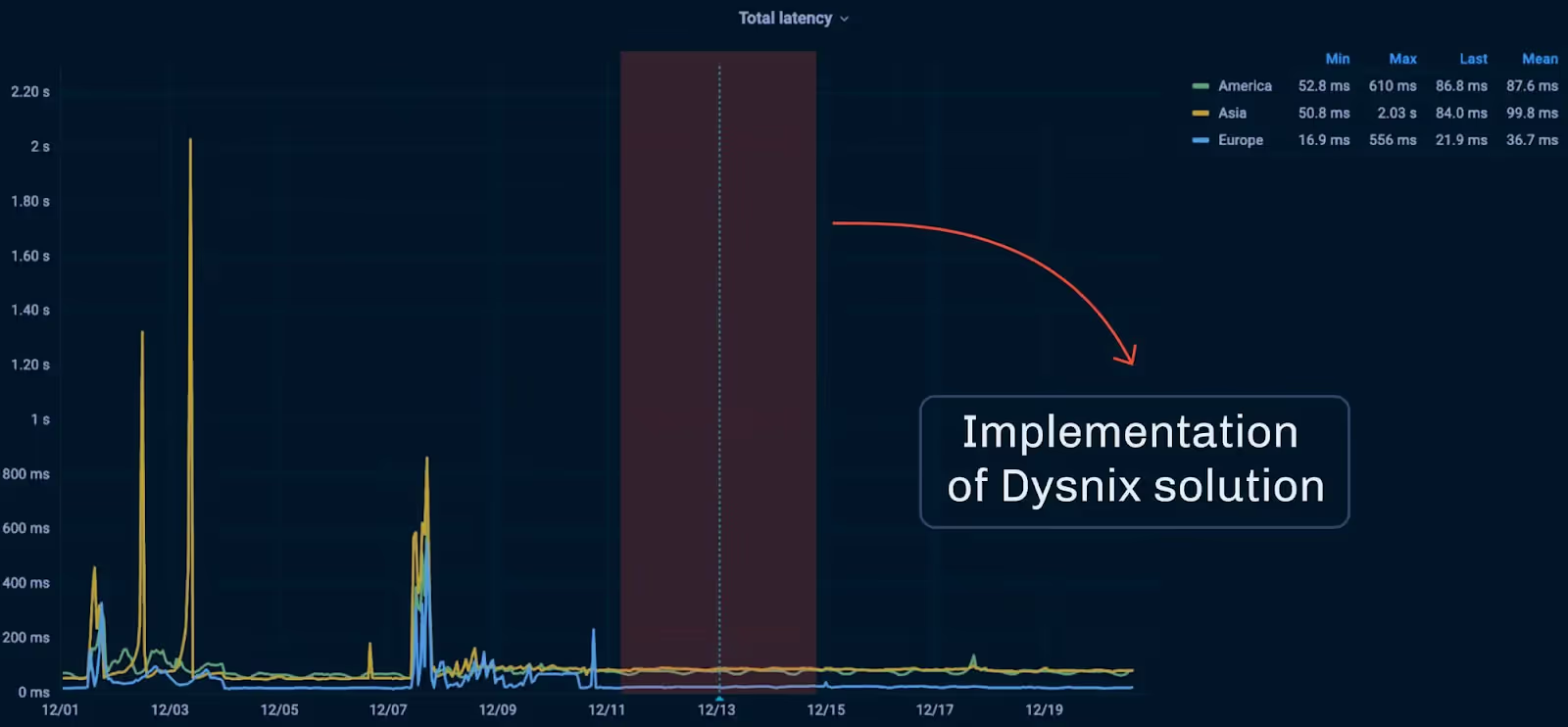

In a nutshell, we compared what breaks first in Web3 stacks and what protects revenue. The winners had a simple pattern. Keep critical flows fast and isolated. Scale the parts that face customers. Set clear targets for latency, uptime, and recovery. Measure cost from the very beginning, and where it matters the most.

The shortest conclusions of our expertise-sharing session are as follows:

- Slow systems lose revenue. Target sub‑100 ms for key user actions.

- Keep two regions live. Rehearse failover and rollback.

- Run critical paths on dedicated servers. Scale edges on Kubernetes.

- Track latency and errors next to cost per 1k requests.

- OVHcloud is strong in the EU with predictable bandwidth. AWS EC2 Bare Metal and Hetzner are solid alternatives.

- Use the readiness checklist before launch or scale.

Read further to dive into the details of our workshop. We remade it into a practical guide your team can execute without changing your product roadmap.

Problem-solving infra design: Where money leaks and how to stop it

We move straight to the point while discussing infrastructure, and that’s why the flaws of infrastructure stand behind the challenges of our clients. That’s the problem that’s easily cured with a list of simple migration or upgrading actions:

Other examples of numbers you might like to track while improving your infrastructure:

- RPC read path p95 under 50 ms with private networking and prescaling;

- 99.99% uptime across validators and payment gateways with dual‑region failover;

- 30–50% cloud spend reduction from tiering, autosleep, and waste cleanup in 90 days;

- Finality lag held within 1 block during chain events using snapshot/rehydrate.

Tie alerts to budgets. If latency, errors, or lag cross the line, autoscale or shed load before users feel it.

Most startups think of scaling. The best—do it right

You scale faster and with less effort when you remove waste and keep p95 stable. Measure cost next to SLOs and act before users feel it.

- Split steady vs bursty. Steady hot paths on Bare Metal. Bursty on K8s with prescaling.

- Tier storage by access. NVMe for hot DBs/indexers, Block for warm, Object for cold with lifecycle.

- Measure cost next to SLOs. $/1k requests and per‑model spend with p95 and error rate. Throttle unprofitable traffic.

- Predict peaks and prewarm. Scale before drops, listings, and chain upgrades.

- Kill waste fast. Reclaim idle GPUs, zombie PVCs, orphan nodes. Autosleep non‑prod.

- Cut egress and cross‑talk. Prefer private paths, CDN assets, compress/batch, co‑locate compute and data.

Scalability and performance on one page

These are the levers that keep p99 flat while QPS grows.

DevSecOps and compliance that protect revenue

Security and compliance are load‑bearing. Treat them as engineering constraints with budgets and gates, not checklists on a slide. And maybe our advice here is debatable, and there are other ways of implementing DevSecOps, but the best practices are as follows:

- Policy as code in CI/CD. Block non‑compliant infra/app changes. SBOMs and signature verification at admission.

- Zero trust on private networks. Mutual TLS, short‑lived creds, JIT access. HSM/KMS for key ops. Remote signers for validators.

- Immutable deployments and provenance. Sigstore signing. Only trusted images/models in prod.

- Real‑time risk and observability. Traces across payment→gateway→chain. Watch p95/p99, error rate, finality lag, and queue age.

- Resilience by design. Active‑active, idempotent queues, deterministic rollback. Chaos drills measured against RTO/RPO.

- Privacy automation. Tokenization, field‑level encryption, retention policies, and audit evidence export.

Attach business metrics to drills. Track revenue at risk per minute during failovers. This keeps investment focused.

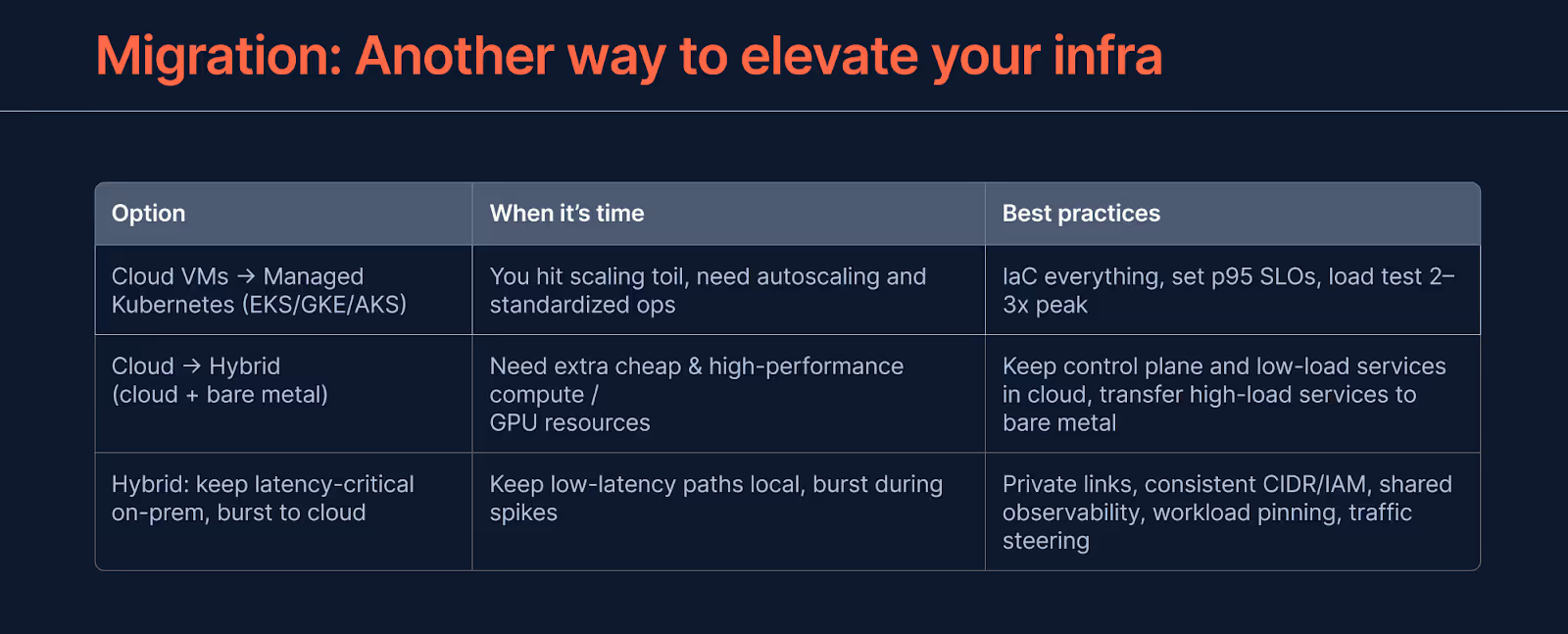

Infrastructure issue? Try migration

Stuck performance and rising incidents often trace back to the wrong platform mix, noisy neighbors, or one-region designs. Migration fixes root causes when tuning hits a wall. Treat it as an engineered, low-downtime program with clear business goals: sub-100 ms on critical flows, 99.99% uptime, and lower $/1k requests.

Best practices

- In-place hardening: Move hot state to dedicated NVMe, add private networking, and front public edges with WAF and rate limits. Fastest path to stability.

- Partial replatform: Keep data where it is, shift elastic services to Managed Kubernetes with prescaling and blue-green deploys. Cuts latency spikes without data risk.

- Phased provider shift: For EOL or cost issues, drain by service. Stand up parallel stacks, mirror traffic, then switch with health gates. Use snapshots and deterministic rollback.

- Cross-region activation: Add a second region as active-active with idempotent queues and tested failover. Removes single-region fragility.

Best‑practice setups on OVHcloud, with brief alternatives

Disclaimer: Provider inventories and prices change. Validate region latency, GPU stock, and egress before committing.

- OVHcloud: strong EU latency and bandwidth economics with vRack isolation.

- AWS EC2 Bare Metal: broadest regional reach and enterprise controls; watch egress costs.

- Hetzner: cost-efficient dedicated NVMe; validate DDoS posture and jitter for hot paths.

One checklist before launch or scale

Use this as your go/no‑go gate. If an item is red, delay the launch.

Core for all teams

Add‑ons by project type

- Payments: Auth p95 < 100 ms. Ledger RPO ≤ 1 min. RTO ≤ 5 min. Per‑merchant/BIN/IP budgets. Idempotency keys. Reconciliation and chargeback runbooks.

- L1 and cross‑chain: Validators on tuned dedicated servers. Remote signer/HSM. Two or more relayers. Rollback by block height. Lag and missed‑block alerts.

- Compliance/fraud: Event→decision p95 ≤ 200 ms. Backpressure and safe fallback rules. 2‑of‑N intel quorum. Immutable logs per jurisdiction. DPIA if required.

- AI startups: Training on dedicated GPU. Inference on K8s GPU pools with QoS/MIG. Per‑model SLO and spend guardrails. Shadow/canary deploys with drift alerts.

The last reminder

The game changers we offered to you are pragmatic. Dedicated NVMe servers for critical state stabilize p99 and reduce failure blast radius. Kubernetes gives elastic scale for public edges without idle burn. Private networking contains jitter and abuse. Fast snapshots with deterministic rollback cut recovery from hours to minutes. For AI, GPU pool isolation and per‑model cost/SLO guardrails keep demos sharp and margins healthy.

None of these ships by accident. It needs a senior team that thinks in SLOs, treats security as code, rehearses failover, and reads kernel and network signals when incidents hit. Most startups lack that bench during hypergrowth, which is where specialists pay back fast.

RPC Fast and Dysnix bring that bench. We place hot paths on the right metal, keep edges elastic, wire budgets to alerts, and leave you with clear runbooks.

Map your gaps to revenue, trust, and regulatory exposure. Then pick the cures your team can deliver in weeks. Make these cures concrete with budgets, e.g., auth p95 under 100 ms.

Map your gaps to revenue, trust, and regulatory exposure. Then pick the cures your team can deliver in weeks. Make these cures concrete with budgets, e.g., auth p95 under 100 ms.

.svg)

.svg)

.svg)