The success of your DeFi app or platform depends on the infrastructure choices you make within the tight constraints of compliance, security, and other restrictions. For us as DevOps experts, the most fascinating aspect of DeFi infrastructure is that you can’t build it “in a vacuum.”

Staying profitable and competitive while meeting every compliance requirement, scaling with user demand, and never suffering a security breach or data loss: that is the dream of every DeFi exec.

The right moves now can unlock new scale, security, and efficiency. This playbook distills advanced strategies, shortcuts, and real-world lessons for C-levels building the next wave of DeFi.

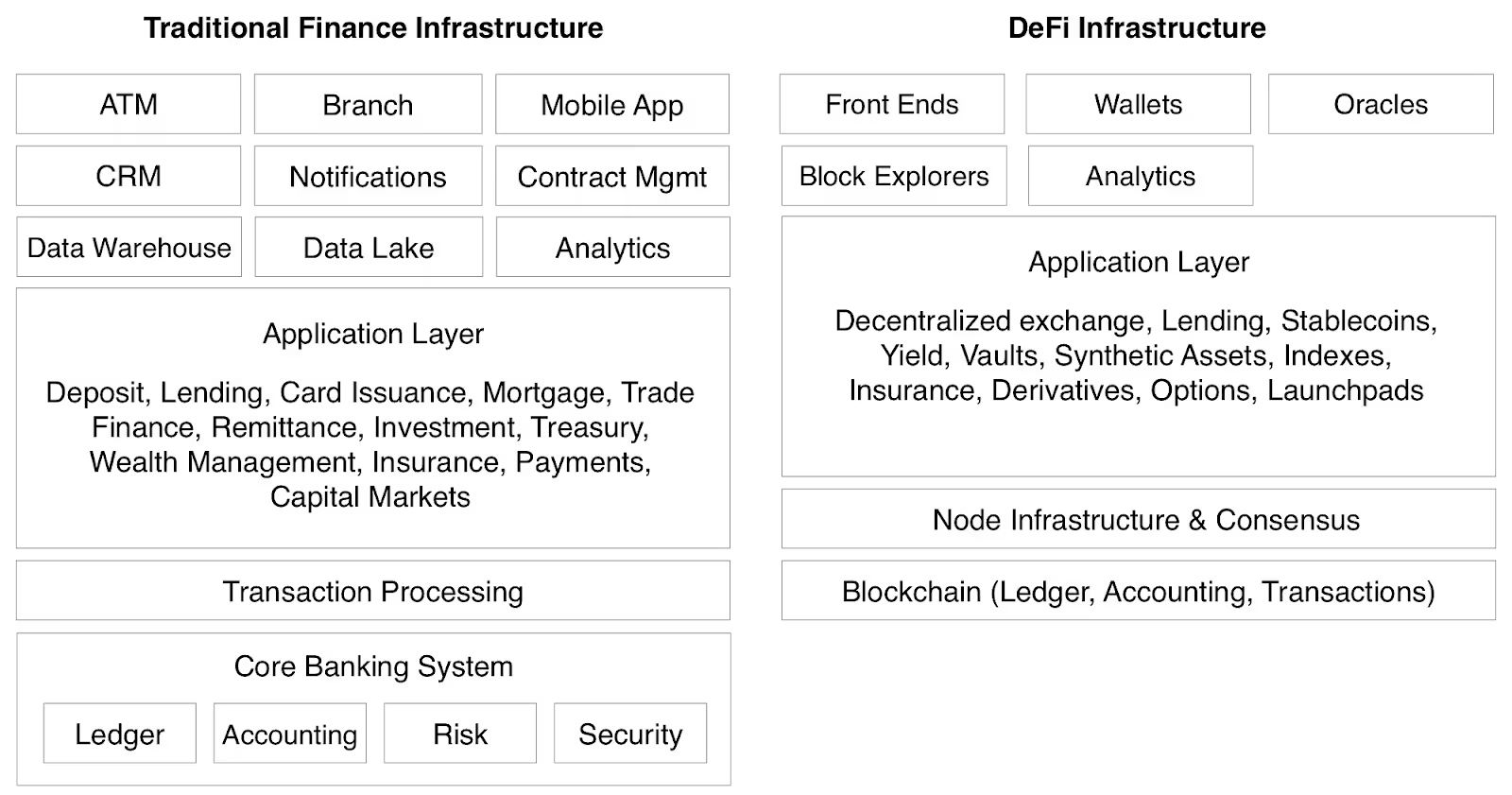

The modern DeFi stack: What’s under the hood?

DeFi infrastructure is a layered performance system.

- At the ingress, RPC endpoints determine request latency, reliability, and burst tolerance; saturated or rate-limited gateways cascade into failed transactions and slippage.

- Indexing layers transform raw chain data into a queryable state; the breadth of indexed entities and freshness windows drive UX responsiveness for portfolios, risk engines, and oracles.

- Analytics adds behavioral, risk, and operational insights; real-time telemetry and anomaly detection shorten incident MTTR and inform routing, fee strategies, and market-making logic.

- Monetary & collateral layer (stablecoins + RWAs) anchors the rest of the stack. Reserve-backed stablecoins and tokenized treasuries now function as the default settlement, margin, and treasury rails for DeFi credit, perps, and liquidity pools.

Your infra provider’s support for high‑quality price feeds, treasury token data, and RWA proof‑of‑reserves directly affects liquidation safety, leverage limits, and regulatory reporting. Head‑block lag, stale pricing, or inconsistent views of stablecoin pegs at this layer can cascade into bad debt, failed liquidations, or compliance gaps.

- MEV protection governs transaction ordering and leakage; private mempools, bundle relays, and sim-based pre-trade checks reduce sandwich and backrun risk, improving execution quality.

- Cross-chain infrastructure (bridges, intent routers, message layers) manages liquidity mobility and state consistency; guarantees, latency, and failover policies directly affect capital efficiency and user trust.

- Monitoring and observability tie the stack together with SLOs, traces, and alerts; proactive degradation detection avoids TVL, peg, or liquidation shocks.

Practically, this resembles an always-on, high-frequency financial backbone: microseconds and determinism matter, routing must be adaptive, and safety rails must be default-on.

Winning teams treat infra as a quant discipline—measure, simulate, and re-route—so traders, liquidators, and end users experience consistent, low-variance performance across volatile conditions.

Top 5 infra capabilities for DeFi that will matter most in 2026

- MEV-aware execution quality

– Private mempools, solver integrations, predictable inclusion, low p99.

– Used by: perps, DEXs, liquidators, intent systems.

- Stablecoin and RWA correctness guarantees

– Proof-of-reserves freshness, treasury token pricing, peg deviation alerts.

– Used by: stablecoin issuers, lenders, treasuries, RWAs.

- Rollup/appchain-aware RPC

– Sequencer co-location, DA-layer sync, minimal L2 lag.

– Used by: intents, perps, appchains, high-throughput protocols.

- Cross-chain observability + state consistency

– Bridge indexing, fast reorg handling, multi-chain watchers.

– Used by: routers, aggregators, cross-chain bridges, unified liquidity apps.

- Elastic surge capacity

– Handles 10x/100x short bursts without collapsing or throttling users.

– Used by: All DeFi, especially perps, AMMs, stablecoins during volatility.

So, who you gonna call to get all these features? DeFi infrastructure providers!

DeFi infrastructure providers in 2026: what teams actually need (and why)

In 2026, choosing an infrastructure provider is less about raw RPC access and more about execution quality, multi-chain scale, stablecoin/RWA data integrity, and resilience under volatile load.

Key 2026 features and which projects require them

DeFi projects now operate across L1s, L2s, appchains, and modular stacks; they rely on private mempools, solver networks, and RWA-proof feeds; they require consistent performance for perps, liquidations, intents, bridges, and stablecoin operations. The provider you choose determines how predictable these systems remain in high-stress windows.

DeFi providers’ comparison and overview

Let’s take a quick look at what stands behind the most popular names you’ve heard. The focus is on what each provider is realistically strong at this year.

Note: For more precise information about your current project and requirements, be sure to contact providers directly!

What this means for teams implementing DeFi in 2026

- If you rely on execution quality (perps, DEXs): Pick a provider with private routing, MEV-aware endpoints, and excellent p95/p99 latency.

- If you rely on RWAs or stablecoins: You need archive correctness, proof-of-reserves ingestion, and perfect chain consistency across POPs.

- If you’re building intents, appchains, or L2-native systems: Choose a provider with sequencer adjacency and DA-aware routing.

- If you’re multi-chain: Prioritize indexing freshness, bridge proofs, and predictable cross-chain message tracking.

- If your system surges: Demand clear burst policies, dedicated clusters, and isolation guarantees.

Vendor-neutral verification checklist for choosing the DeFi infra provider

This is a comprehensive set of questions and indicators to collect from DeFi infrastructure providers. Compare them to make the right choice for your project.

Workload profile and test harness

- Define method mix and weights for your app: eth_call (hot paths), eth_getLogs (range scans), the trace_ and debug_ namespaces, eth_getBalance, eth_getBlockByNumber, mempool queries, and write flows (eth_sendRawTransaction/private tx).

- Specify concurrency and burst shapes: steady state, 10x surge, 100x micro-bursts (e.g., token listings, volatile windows).

- Regions in scope: the exact POPs your users hit (e.g., FRA, LHR, SFO, IAD, SGP, NRT).

- Provide a reproducible harness: same wallet set, same block ranges, same filters, identical retry/backoff.
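One way to keep the method mix identical across providers is to draw the request sequence from a seeded random generator. The following is a minimal sketch, not a prescribed harness: the `METHOD_MIX` weights, the `build_schedule` helper, and the seed value are all illustrative assumptions.

```python
import random
from collections import Counter

# Hypothetical method mix; weights are illustrative and need not sum to 1.
METHOD_MIX = {
    "eth_call": 0.35,
    "eth_getLogs": 0.30,
    "eth_getBalance": 0.05,
    "eth_getBlockByNumber": 0.05,
    "eth_sendRawTransaction": 0.05,
}

def normalize(mix):
    """Scale weights so they sum to 1.0; reject empty or negative input."""
    total = sum(mix.values())
    if total <= 0 or any(w < 0 for w in mix.values()):
        raise ValueError("weights must be non-negative with a positive sum")
    return {method: weight / total for method, weight in mix.items()}

def build_schedule(mix, n_requests, seed):
    """Return a reproducible list of method names drawn by weight."""
    rng = random.Random(seed)  # same seed -> same schedule for every provider
    norm = normalize(mix)
    return rng.choices(list(norm), weights=list(norm.values()), k=n_requests)

if __name__ == "__main__":
    schedule = build_schedule(METHOD_MIX, 1000, seed=42)
    print(Counter(schedule).most_common())
```

Replaying the same schedule against each provider means any latency difference comes from the provider rather than from a different draw of methods.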

Latency and throughput SLOs

- Request p50/p95/p99 for each method in each region at N concurrent clients; include time-to-first-byte and full response time.

- Document max sustainable RPS and behavior when exceeding limits (throttle code, backoff headers, error classes).

- Suggested targets to ask for: regional p95 read under 250 ms for hot methods; p95 getLogs (24h window) under 800 ms; p99 write path acceptance under 300 ms with private tx.
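To compare a provider's numbers against the suggested targets, you can compute percentiles from your own probe samples. The sketch below uses the nearest-rank definition of a percentile; the method labels in `TARGETS_MS` and the `check_slo` helper are hypothetical names, and the thresholds simply restate the figures from the list above.

```python
import math

# Targets in milliseconds, restating the suggested figures above.
# The method labels are hypothetical keys used by this sketch only.
TARGETS_MS = {
    ("eth_call", 95): 250,
    ("eth_getLogs_24h", 95): 800,
    ("private_tx_accept", 99): 300,
}

def percentile(samples, pct):
    """Nearest-rank percentile: smallest sample with at least pct% of values at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]

def check_slo(latencies_by_method):
    """Return {(method, pct): (observed_ms, target_ms, passed)} for each target that has data."""
    report = {}
    for (method, pct), target in TARGETS_MS.items():
        samples = latencies_by_method.get(method)
        if samples:
            observed = percentile(samples, pct)
            report[(method, pct)] = (observed, target, observed < target)
    return report

if __name__ == "__main__":
    # 99 fast responses and one slow outlier.
    print(check_slo({"eth_call": [100] * 99 + [900]}))
```

Run it per region and per provider so a slow region is not hidden inside a global average.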

Rate limits and burst handling

- Hard and soft limits by plan; elastic headroom policy; documented 429/5xx shapes; retry-after headers; fairness under contention.

- Isolation options: dedicated clusters, per-endpoint isolation, noisy neighbor protections.
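On the client side, documented throttle responses are only useful if your retry logic honors them. Here is a rough sketch of one possible policy: respect `Retry-After` when it is given in seconds, otherwise fall back to exponential backoff with full jitter. The set of retryable status codes, the `retry_delay` name, and the default base and cap values are assumptions, not any provider's documented behavior.

```python
import random

# Status codes this sketch treats as retryable (an assumption; adjust per provider docs).
RETRYABLE = {429, 502, 503, 504}

def retry_delay(status, headers, attempt, base_s=0.2, cap_s=10.0, rng=random):
    """Return seconds to wait before retrying, or None if the request should not be retried.

    attempt is 0 for the first retry. Only the delta-seconds form of
    Retry-After is parsed; the HTTP-date form falls through to backoff.
    """
    if status not in RETRYABLE:
        return None
    lowered = {key.lower(): value for key, value in headers.items()}
    retry_after = lowered.get("retry-after")
    if retry_after is not None:
        try:
            return min(cap_s, max(0.0, float(retry_after)))
        except ValueError:
            pass  # not a number: use backoff instead
    # Exponential backoff with full jitter, capped at cap_s.
    ceiling = min(cap_s, base_s * (2 ** attempt))
    return rng.uniform(0.0, ceiling)

if __name__ == "__main__":
    print(retry_delay(429, {"Retry-After": "3"}, attempt=0))
    print(retry_delay(503, {}, attempt=2))
    print(retry_delay(400, {}, attempt=0))
```

Keeping retry and backoff identical for every provider under test is what makes the throttling comparison fair.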

Availability, failover, and multi-cloud/region

- Uptime SLA by product; real-time failover policies across regions and clouds; control over region pinning vs anycast.

- Health-check and circuit-breaker guidance for clients; documented RTO/RPO for outages.

- Evidence of chaos testing and the last three failover drills; customer-facing runbooks.
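If a provider offers circuit-breaker guidance, it usually boils down to something like the following simplified sketch: stop sending traffic to an endpoint once its error rate over a rolling window crosses a threshold, then try again after a cool-down. The class, its parameter names, and the default values are assumptions for illustration, and the sketch deliberately omits a half-open probing state.

```python
import time
from collections import deque

class CircuitBreaker:
    """Opens when the error rate over a rolling window exceeds a threshold."""

    def __init__(self, error_rate_threshold=0.05, window_s=60.0, open_s=20.0,
                 min_samples=20, clock=time.monotonic):
        self.threshold = error_rate_threshold
        self.window_s = window_s
        self.open_s = open_s
        self.min_samples = min_samples  # avoid tripping on a handful of requests
        self.clock = clock
        self.events = deque()           # (timestamp, ok) pairs
        self.opened_at = None

    def _trim(self, now):
        # Drop outcomes that have aged out of the rolling window.
        while self.events and now - self.events[0][0] > self.window_s:
            self.events.popleft()

    def allow(self):
        """True if a request may be sent to this endpoint right now."""
        if self.opened_at is None:
            return True
        if self.clock() - self.opened_at >= self.open_s:
            self.opened_at = None       # cool-down over: close and start fresh
            self.events.clear()
            return True
        return False

    def record(self, ok):
        """Record one request outcome; open the breaker if the error rate is too high."""
        now = self.clock()
        self.events.append((now, ok))
        self._trim(now)
        errors = sum(1 for _, good in self.events if not good)
        if len(self.events) >= self.min_samples and errors / len(self.events) > self.threshold:
            self.opened_at = now
```

In a multi-provider setup, one breaker per endpoint lets the client route around a degraded region while the others keep serving.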

Data correctness and freshness

- Head block lag distributions; reorg handling policy and max reorg depth tolerated.

- Consistency across POPs (read-after-write, mempool parity); archive accuracy tests (state proofs or merkle checks). For RWA and stablecoin-heavy systems, this includes consistent views of treasury token price feeds, RWA proof‑of‑reserves updates, and stablecoin peg deviations across regions and endpoints.

- For indexing APIs/subgraphs: freshness windows, backfill speed, and late data handling. Verify that indexers used for RWAs and stablecoins expose timely coupon payments, rebases, and collateral composition changes so your risk and reporting stacks never operate on stale collateral data.
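Two of these checks are easy to run yourself from probe data: head-block lag per provider and disagreement between regional views of a stablecoin price. The sketch below assumes you have already collected the latest block number per provider and a price per region; the function names and the `max_spread_bps` default are hypothetical, and treating the highest reported head as the true tip is an approximation.

```python
def head_lag(heads):
    """Given {provider: latest block number}, return {provider: blocks behind the highest head}."""
    if not heads:
        return {}
    tip = max(heads.values())  # assumption: the highest reported head is the chain tip
    return {name: tip - height for name, height in heads.items()}

def lagging(heads, tolerance_blocks=1):
    """Sorted list of providers whose lag exceeds the tolerance."""
    return sorted(name for name, lag in head_lag(heads).items() if lag > tolerance_blocks)

def peg_deviation_bps(price, peg=1.0):
    """Absolute deviation from the peg, in basis points."""
    return abs(price - peg) / peg * 10_000

def views_disagree(prices_by_region, max_spread_bps=5.0):
    """True if the spread between regional prices exceeds max_spread_bps of the lowest price."""
    lo = min(prices_by_region.values())
    hi = max(prices_by_region.values())
    return (hi - lo) / lo * 10_000 > max_spread_bps

if __name__ == "__main__":
    print(lagging({"provider_a": 100, "provider_b": 98, "provider_c": 99}))
    print(round(peg_deviation_bps(0.995), 2))
    print(views_disagree({"FRA": 1.0000, "IAD": 1.0010}))
```

Collect these as distributions over the whole test window, since a single snapshot says little about behavior during volatile periods.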

MEV protection and transaction privacy

- Private tx support: relay partners (e.g., Flashbots/bloXroute/Taichi), bundle support, simulated fills, frontrun/sandwich protections.

- Visibility guarantees (who sees my tx before inclusion), inclusion latency stats, and fallback to public mempool behavior.

- Configurable slippage guards, nonce management, and stuck-tx automation.
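A slippage guard is, at its core, a comparison between the simulated output of a trade and a floor derived from the quote. As a small illustration (the function names are hypothetical, and amounts are assumed to be integers in token base units), the floor can be computed with integer arithmetic to avoid floating-point rounding:

```python
def min_amount_out(quoted_out, max_slippage_bps):
    """Smallest acceptable output in integer base units, rounded up (the stricter direction)."""
    if not 0 <= max_slippage_bps <= 10_000:
        raise ValueError("max_slippage_bps must be between 0 and 10000")
    numerator = quoted_out * (10_000 - max_slippage_bps)
    return -(-numerator // 10_000)  # ceiling division on integers

def passes_guard(simulated_out, quoted_out, max_slippage_bps):
    """True if the simulated fill is at or above the slippage floor."""
    return simulated_out >= min_amount_out(quoted_out, max_slippage_bps)

if __name__ == "__main__":
    # 50 bps (0.5%) tolerance on a quote of 1,000,000 base units.
    print(min_amount_out(1_000_000, 50))
    print(passes_guard(994_999, 1_000_000, 50))
```

When a provider advertises configurable guards, ask whether the check runs against a pre-trade simulation like this or is only enforced on-chain.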

Observability and analytics

- Per-endpoint logs, traces, and request metadata export (headers; correlation IDs); retention and export formats.

- Real-time dashboards for latency/errors/throughput; webhooks/alerts for SLO violations; spend and quota analytics.

Security and compliance

- Attestations: SOC 2 Type II, ISO 27001, penetration test summaries, and key management practices.

- DDoS posture, WAF, bot mitigation; customer IP allowlists/VPN/privatelink options.

- Data residency options and lawful intercept posture; sanctions policy affecting RPC methods.

Change management

- Notice periods for breaking changes, chain upgrades, and client version bumps; backward compatibility policies.

- Staging/sandbox parity with production; deterministic version pinning per endpoint.

Support and incident response

- MTTA/MTTR by severity; staffed hours and escalation paths (Slack/Telegram/on-call).

- Postmortem commitment and circulation timelines; status page transparency and historical uptime.

Pricing and commercial terms

- Pricing unit and comparability (per request vs compute units vs credits); overflow pricing; egress/log export costs.

- Overage behavior, throttling vs billing only; ability to set hard caps with alerts.

- RWA and compliance‑heavy workloads often generate large volumes of historical and archive queries (e.g., multi‑year coupon payment histories, treasury token transfers, and proof‑of‑reserves snapshots). Clarify pricing for:

- High‑volume archive reads used for regulatory reporting and audits;

- Periodic roll‑ups of coupon or yield payments across thousands of addresses;

- Bulk exports of transaction and balance history for RWA investors or treasuries.

- Ask providers whether they offer discounted tiers or dedicated SKUs for these “slow but heavy” data‑egress patterns so your stablecoin/RWA reporting stack doesn’t unexpectedly dominate your infra bill.
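Because providers bill in different units, it helps to translate each plan into a monthly cost for your own method mix. The sketch below is one rough way to do that; the `Plan` fields, the per-method unit weights, and every number in the example are made up for illustration and do not reflect any provider's actual pricing.

```python
from dataclasses import dataclass

@dataclass
class Plan:
    name: str
    monthly_fee: float
    included_units: int
    overage_per_million: float
    units_per_method: dict  # billing units charged per call, keyed by method

    def monthly_cost(self, calls_by_method):
        """Monthly fee plus overage for the given {method: call count} workload."""
        units = sum(
            self.units_per_method.get(method, 1) * count  # default: 1 unit per call
            for method, count in calls_by_method.items()
        )
        overage_units = max(0, units - self.included_units)
        return self.monthly_fee + overage_units / 1_000_000 * self.overage_per_million

if __name__ == "__main__":
    # Entirely hypothetical plan and workload.
    plan = Plan("example", 100.0, 1_000_000, 10.0, {"eth_getLogs": 5})
    workload = {"eth_getLogs": 400_000, "eth_call": 500_000}
    print(plan.monthly_cost(workload))
```

Feeding the archive-heavy reporting workload through the same calculation shows quickly whether those queries would dominate the bill.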

Roadmap fitness (next 6–12 months)

- Chains and POPs to be added; MEV/private tx roadmap by chain; indexer improvements; dedicated cluster SKUs.

Side-by-side validation plan you can run in a week

Synthetic probes

Probe harness config (JSON)

Use this to drive synthetic probes across the mentioned DeFi infrastructure providers, methods, and regions. Replace placeholders with your endpoints and adjust weights to your real method mix.

    {
      "version": "1.0",
      "description": "DeFi RPC provider benchmarking harness",
      "providers": [
        {
          "name": "alchemy",
          "endpoints": {
            "ethereum_mainnet": "https://eth-mainnet.g.alchemy.com/v2/ALCHEMY_KEY",
            "arbitrum_one": "https://arb-mainnet.g.alchemy.com/v2/ALCHEMY_KEY"
          }
        },
        {
          "name": "infura",
          "endpoints": {
            "ethereum_mainnet": "https://mainnet.infura.io/v3/INFURA_KEY",
            "optimism": "https://optimism-mainnet.infura.io/v3/INFURA_KEY"
          }
        },
        {
          "name": "quicknode",
          "endpoints": {
            "ethereum_mainnet": "https://example-eth.quiknode.pro/QUICKNODE_KEY/",
            "polygon_pos": "https://example-polygon.quiknode.pro/QUICKNODE_KEY/"
          }
        },
        {
          "name": "chainstack",
          "endpoints": {
            "ethereum_mainnet": "https://nd-XXXXXX.chainstacklabs.com/v1/KEY",
            "base": "https://nd-XXXXXX.chainstacklabs.com/v1/KEY"
          }
        },
        {
          "name": "blockdaemon",
          "endpoints": {
            "ethereum_mainnet": "https://svc.blockdaemon.com/ethereum/mainnet/KEY",
            "arbitrum_one": "https://svc.blockdaemon.com/arbitrum/one/KEY"
          }
        },
        {
          "name": "ankr",
          "endpoints": {
            "ethereum_mainnet": "https://rpc.ankr.com/eth/ANKR_KEY",
            "bnb": "https://rpc.ankr.com/bsc/ANKR_KEY"
          }
        },
        {
          "name": "rpc_fast",
          "endpoints": {
            "ethereum_mainnet": "https://eth-mainnet.rpcfast.com/v1/RPCFAST_KEY",
            "base": "https://base-mainnet.rpcfast.com/v1/RPCFAST_KEY"
          }
        }
      ],
      "regions": [
        { "name": "us-east-1", "cloud": "aws" },
        { "name": "us-west-1", "cloud": "aws" },
        { "name": "europe-west3", "cloud": "gcp" },
        { "name": "ap-northeast-1", "cloud": "aws" },
        { "name": "ap-southeast-1", "cloud": "aws" }
      ],
      "workload": {
        "methods": [
          { "method": "eth_blockNumber", "weight": 0.05 },
          { "method": "eth_getBalance", "weight": 0.05, "params_template": ["$WALLET", "latest"] },
          { "method": "eth_call", "weight": 0.35, "params_template": ["$CALL_OBJ", "latest"] },
          { "method": "eth_getLogs_1h", "weight": 0.2, "method_override": "eth_getLogs", "params_template": ["$LOG_FILTER_1H"] },
          { "method": "eth_getLogs_24h", "weight": 0.1, "method_override": "eth_getLogs", "params_template": ["$LOG_FILTER_24H"] },
          { "method": "trace_block", "weight": 0.1, "chain_support": ["ethereum_mainnet"] },
          { "method": "debug_traceTransaction", "weight": 0.05, "sample_from_recent_tx": true },
          { "method": "eth_sendRawTransaction", "weight": 0.05, "signed_tx_template": "$SIGNED_TX" }
        ],
        "concurrency": {
          "steady_rps": 50,
          "per_provider_limit": 200,
          "per_region_limit": 200
        },
        "schedule": {
          "interval_seconds": 60,
          "duration_hours": 168,
          "bursts": [
            { "label": "10x_burst", "multiplier": 10, "duration_seconds": 300, "repeat_minutes": 60 },
            { "label": "100x_microburst", "multiplier": 100, "duration_seconds": 30, "repeat_minutes": 180 }
          ]
        }
      },
      "timeouts_and_retries": {
        "connect_timeout_ms": 500,
        "read_timeout_ms": 2000,
        "max_retries": 2,
        "retry_backoff_ms": 200,
        "circuit_breaker": {
          "error_rate_threshold": 0.05,
          "rolling_window_seconds": 60,
          "open_duration_seconds": 20
        }
      },
      "validation": {
        "head_lag_tolerance_blocks": 1,
        "reorg_depth_tolerance": 2,
        "response_schema_checks": true
      },
      "metrics": {
        "latency_percentiles": [50, 95, 99],
        "record_ttfb": true,
        "error_classes": true,
        "throttle_detection": { "http_429": true, "headers": ["Retry-After", "X-RateLimit-Remaining"] },
        "log_fields": ["provider", "region", "chain", "method", "status", "http_code", "ttfb_ms", "latency_ms", "resp_bytes", "block_lag", "error_type"]
      },
      "outputs": {
        "stdout": true,
        "ndjson_path": "./results/rpc_benchmark.ndjson",
        "prometheus": { "enable": true, "port": 9090 },
        "grafana_dashboards": { "export_path": "./dashboards" }
      },
      "secrets": {
        "wallets": ["0xWALLET1", "0xWALLET2"],
        "call_objects": ["$ERC20_BAL_CALL_OBJ", "$VAULT_CALL_OBJ"],
        "log_filters": {
          "1h": { "fromBlock": "$HEAD_MINUS_300", "toBlock": "latest", "address": ["$CONTRACT_ADDR"], "topics": ["$TOPIC0"] },
          "24h": { "fromBlock": "$HEAD_MINUS_7200", "toBlock": "latest", "address": ["$CONTRACT_ADDR"], "topics": ["$TOPIC0"] }
        },
        "signed_tx_samples": ["0xSIGNED_TX_SAMPLE"]
      }
    }

Realistic load replay

Surge and failure drills

Acceptance thresholds (example)

Why RPC Fast?

The main reason to try our DeFi infrastructure service is that we built it after analyzing other providers: we took what they do best and filled the gaps with our own expertise.

We recommend including RPC Fast in that bake-off and evaluating it against your target chains and regions; keep whichever provider consistently meets your SLOs at the lowest operational risk and total cost.
