On Solana, the wrong data pipeline shows up as missed fills, late liquidations, or indexers that fall behind and never catch up. The problem is not “which RPC is fastest.” The problem is choosing where you want to sit in the Solana data path.

You have three options that look similar on paper but behave very differently in production.

- Standard JSON-RPC and WebSockets;

- Yellowstone gRPC (Geyser);

- ShredStream.

After spending some time reading this article, you’ll get rid of doubts about what streaming approach you need and where you should start your optimization journey.

And if you’re evaluating RPC Fast as a provider for nodes or managed services, treat this as a stack decision, not a vendor decision.

The problem: you are optimizing the wrong thing

Most teams start with a “faster endpoint” to generate the biggest value, as they think. This approach fails fast, better before the budget drains completely. The bigger issues that cause this usually sit elsewhere:

- Latency variance, not average latency.

- Uptime gaps during spikes, not steady-state load.

- Cost blowups from polling, retries, and backfills.

- Operational risk from fragile streaming clients.

Solana makes this worse because high throughput compresses your reaction window. A 200 ms slip is not a rounding error. It is often the difference between “first in slot” and “too late.” To spot and react to all key events and keep your project up with detailed observation of the whole Solana, you have to use these extra tools.

Explaining each streaming option

Standard RPC (JSON-RPC plus WebSockets)

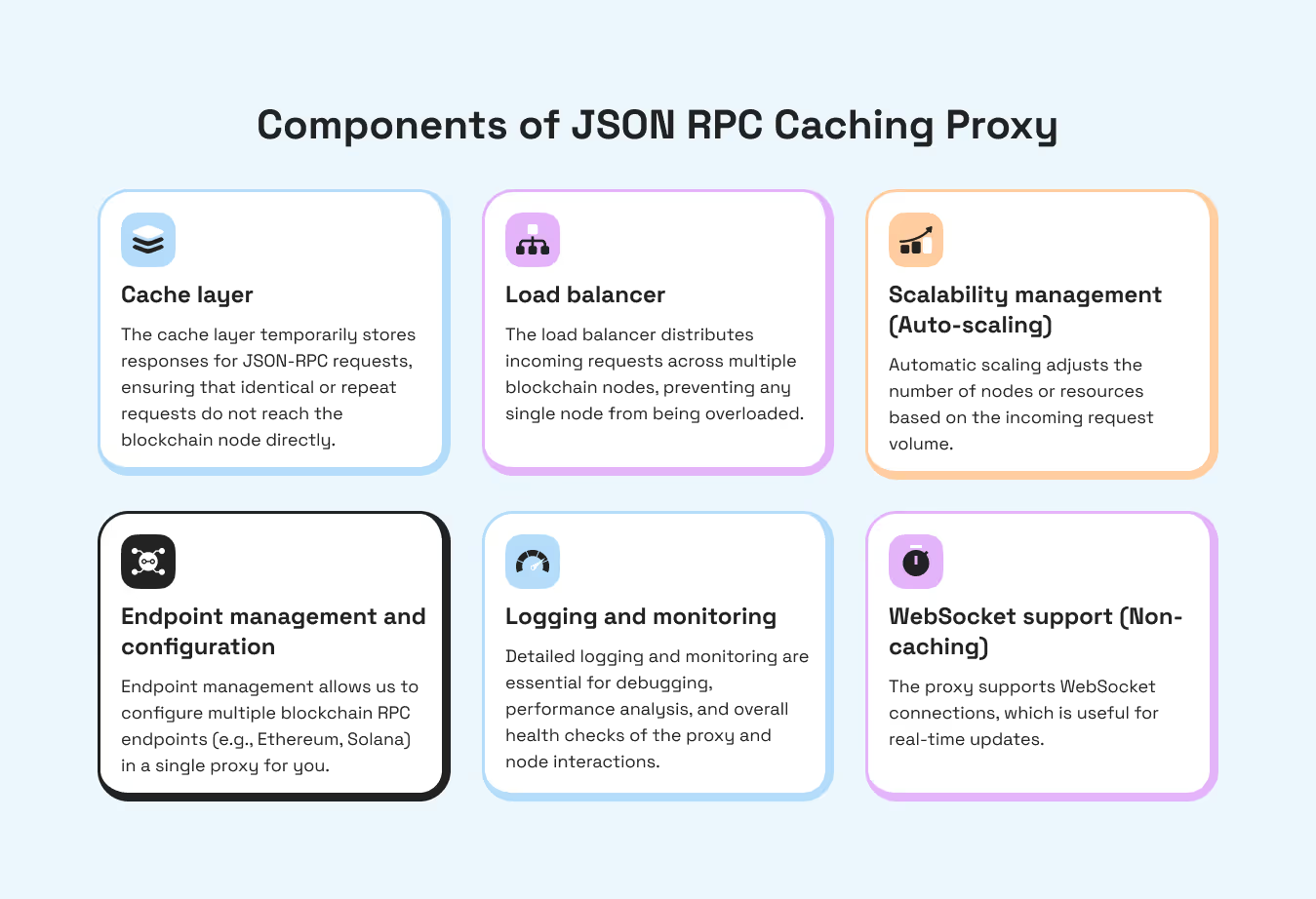

This is the baseline. You poll over HTTP or subscribe over WebSockets to logs, accounts, signatures, slots, or blocks. It is easiest to integrate and easiest to debug. It also pushes compute and bandwidth costs onto you when you scale.

The basic setup goes through the usual evolution: poll with getProgramAccounts, move to WebSockets, then hit filtering and scaling limits as production load rises, leading teams to gRPC streaming via Geyser plugins like Yellowstone.

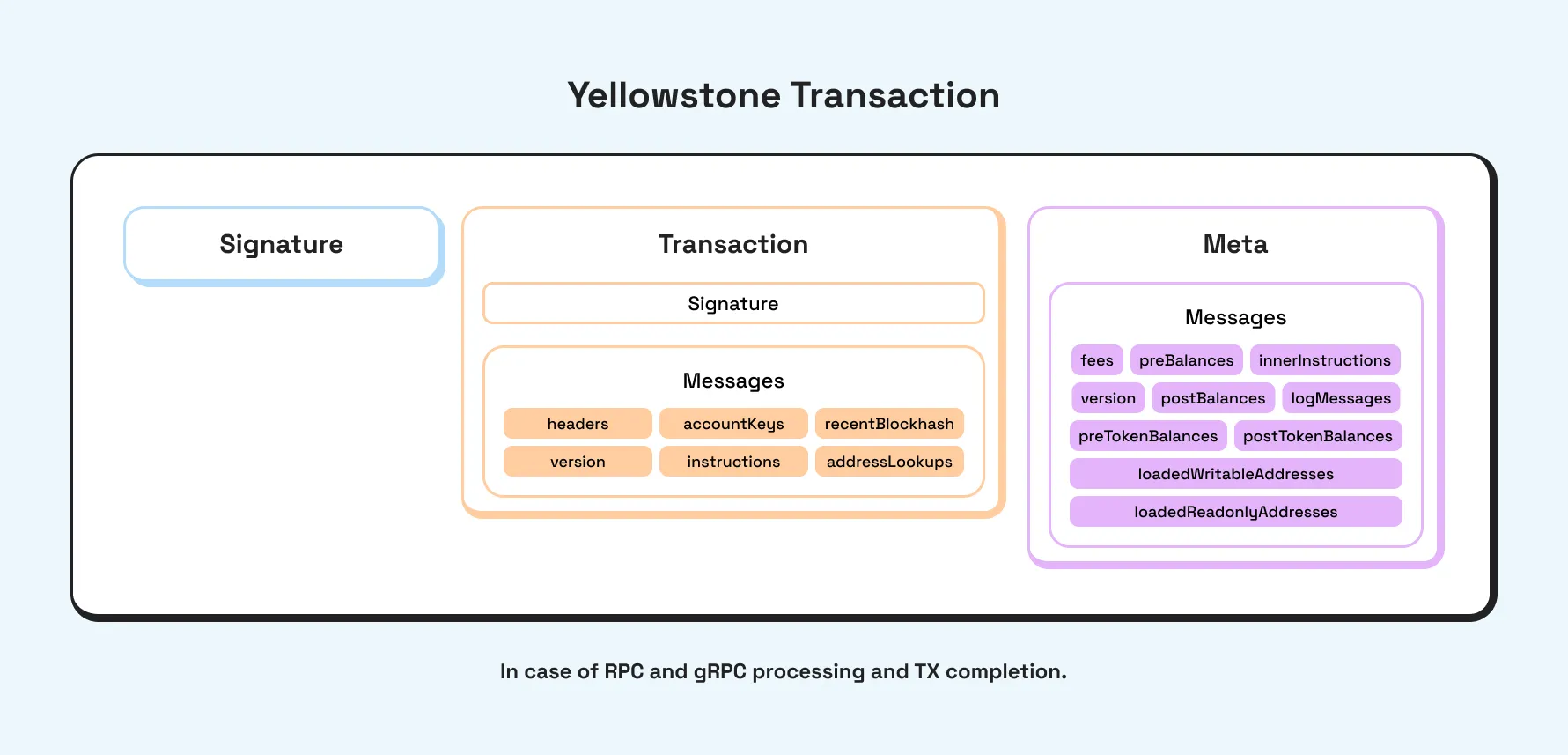

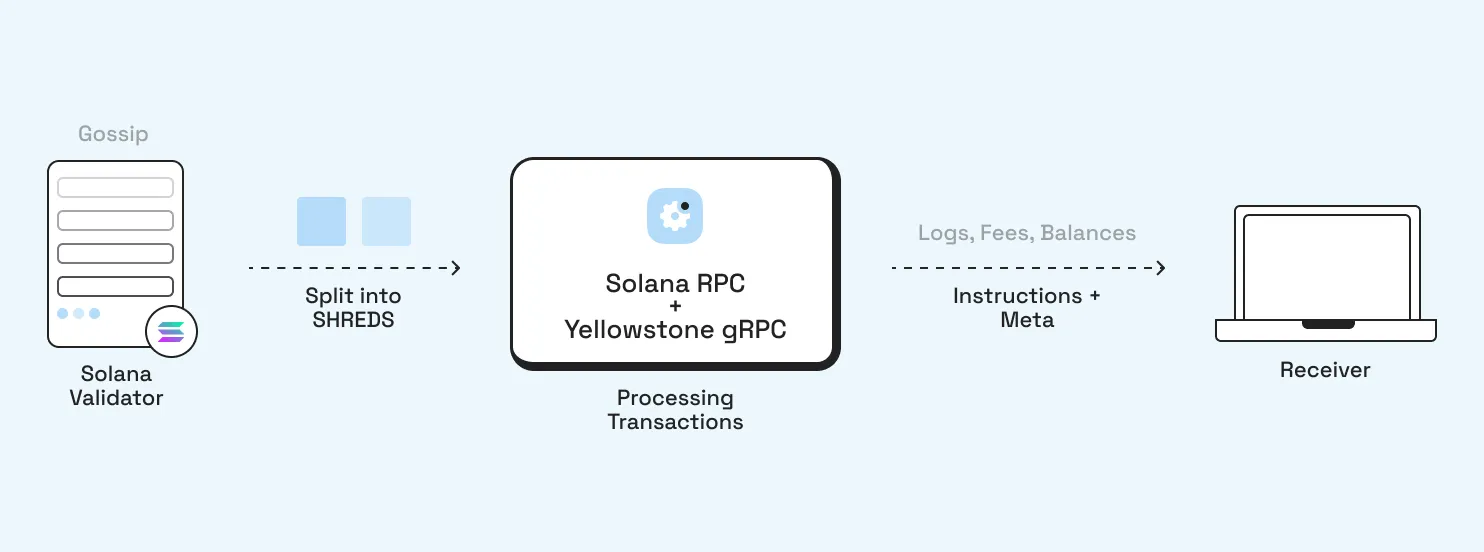

Yellowstone gRPC (Geyser)

Yellowstone is an open source gRPC interface built on Solana’s Geyser plugin system. It streams accounts, transactions, entries, blocks, and slots over gRPC, with server-side filters. We would describe the value as “ultra-low latency streaming” with advanced filtering and multiple stream types.

Implementation-level details, the reference repo documents filters, ping keepalives, and how blocks are reconstructed are here.

ShredStream

ShredStream is a lowest-latency feed of Solana leader data, delivering shreds as they are produced. Jito positions it as “lowest latency shreds from leaders.”

The critical difference: ShredStream is earlier in the pipeline. It is closer to raw block production, which improves time-to-first signal. It also increases the parsing burden and correctness work on the client side.

Criteria that matter to CTOs and SRE leads

Latency reduction

- Standard RPC: Latency depends on provider, region, and polling cadence. WebSockets help, but are still JSON-heavy and filter-light.

- Yellowstone: Binary gRPC streams plus filters remove a lot of wasted traffic and reduce end-to-end reaction time.

- ShredStream: Earliest bytes. Best for time-sensitive detection.

Uptime and data continuity

- Standard RPC: Polling plus provider rate limits leads to thundering herds and dropped requests during market spikes.

- Yellowstone: You run one long-lived stream, with explicit keepalive patterns (the Yellowstone client supports

pingfor idle connection survival behind load balancers). - ShredStream: Uptime depends on your feed provider and your ability to handle gaps, dedupe, and reassembly.

Cost control

The biggest cost drivers are not per-request pricing. They are engineering hours, backfills, and wasted bandwidth.

- Standard RPC inflates cost when you poll large account sets or re-fetch historical data due to missed events.

- Yellowstone reduces wasted bytes using filters and mechanisms like account data slicing.

- ShredStream reduces reaction latency, but increases build cost because you own more of the decoding pipeline.

High availability

HA is a product of redundancy plus clean failure modes.

- Standard RPC: Easiest to fail over between providers because the interface is consistent.

- Yellowstone: You need multi-region streams and reconnect logic, but you can run active-active subscribers and dedupe downstream.

- ShredStream: HA means combining feeds or pairing with Yellowstone or Standard RPC to validate and backfill.

Use cases overview

Standard RPC

- Wallets and consumer apps where user experience is more important than first-slot reaction;

- Admin dashboards, alerting, and ops tooling;

- Low-rate bots that do not parse full program flows in real time.

Yellowstone gRPC

- Indexers and analytics pipelines that need reliable, filtered streams of transactions and account updates;

- Risk engines and monitoring where throughput and filtering matter more than absolute earliest bytes;

- Backends that benefit from binary streams and typed schemas.

ShredStream

- HFT and latency races where the earliest detection is the product;

- MEV-aware systems doing custom decoding and simulation;

- Teams with the engineering maturity to own reassembly, dedupe, and correctness layers.

Micro-case, using RPC Fast benchmarks

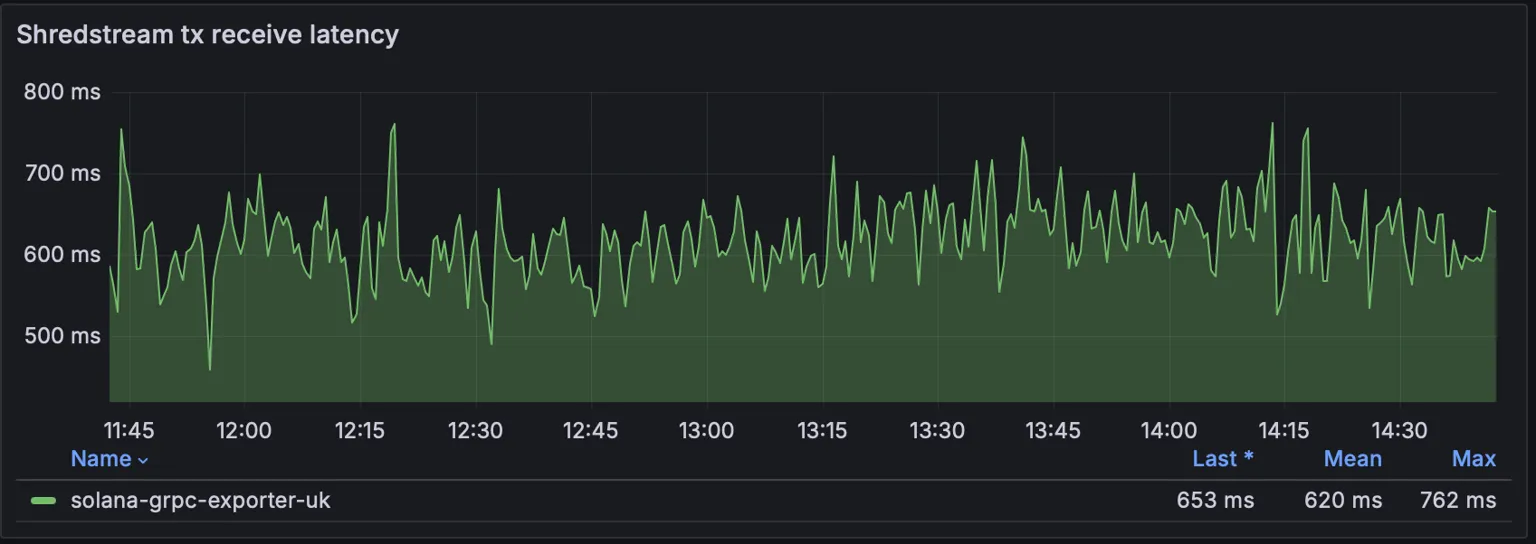

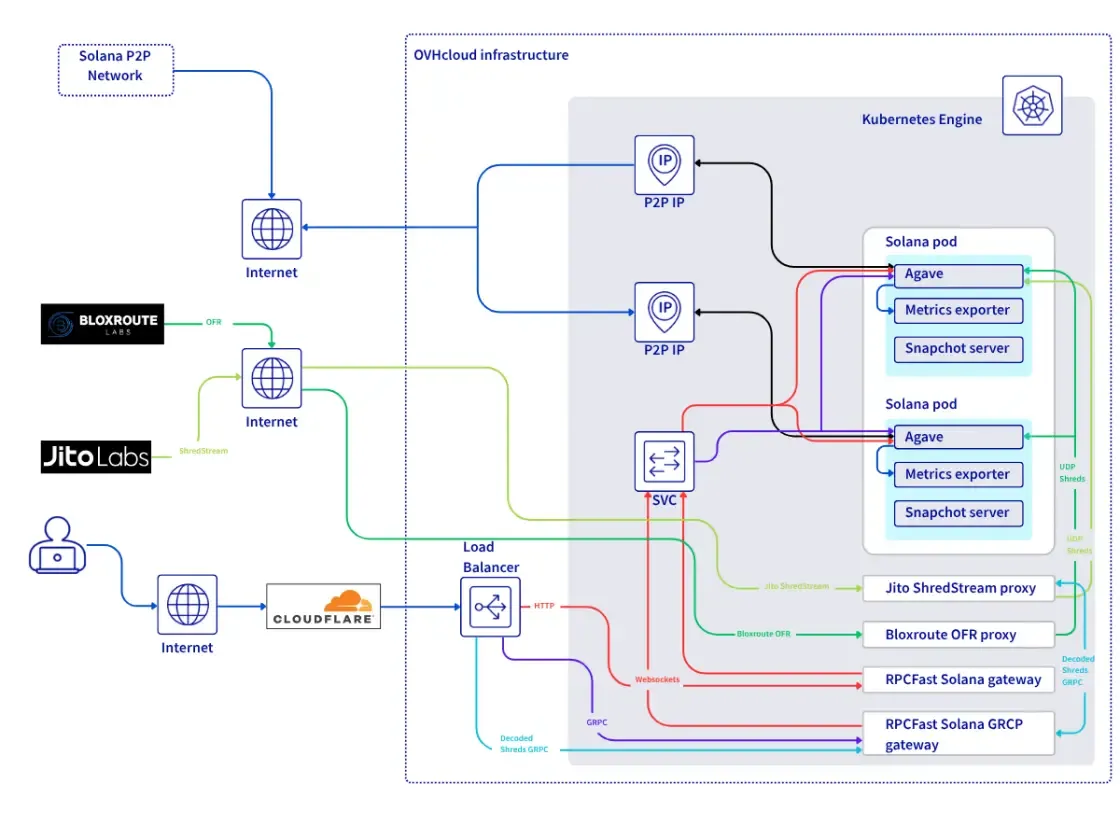

RPC Fast nodes using Jito ShredStream improved transaction arrival time on Yellowstone gRPC by an average of 120 ms, with a 99th percentile gain of around 270 ms, across 185k matching transactions in one published summary.

Feature comparison table

Recommended tool combinations and when to use them

Combination A: Standard RPC only

Use when:

- You need fast integration, basic subscriptions, and easy provider swapping.

- You accept higher latency and occasional missed events under load.

Combination B: Yellowstone gRPC plus Standard RPC

This is the default architecture for most production indexers. Use when:

- You need reliable streaming for real-time ingestion plus ad hoc reads.

- You want streaming for “what changed” and JSON-RPC for “fetch details on demand.”

Combination C: ShredStream plus Yellowstone gRPC

Choose this option if you need the earliest detection plus structured filtered streams for downstream logic. It’s like the best practice already: pairing ShredStream with Yellowstone to get earlier updates with fewer gaps, since Yellowstone benefits from faster underlying shred arrival.

Combination D: ShredStream plus Standard RPC

Use this combo in case you want the earliest signal, but you validate, enrich, and backfill using JSON-RPC. This works well for trading stacks where correctness and observability need a second source of truth.

Implement your flows wisely

So here’s what we have to sum up. Standard RPC is a convenience interface. Yellowstone gRPC is a scalable streaming interface. ShredStream is for the earliest possible signal feed.

The right answer is rarely “pick one.” Most production teams run a combination:

- One stream for speed.

- One interface for correctness and enrichment.

- One operational plan for failover and backfill.

.svg)

.svg)

.svg)