In Solana-based trading, timing is dominant. Over 2.2 million daily active wallets are transacting on the Solana network, and competition for block space is brutal. Every millisecond matters. Whether it’s arbitrage, liquidation, or MEV, your transaction needs to land in the right slot, or it’s too late.

But here’s the catch: most trading bots are hosted far away from their Solana RPC nodes. That physical gap adds unpredictable latency, packet loss, and confirmation delays. Even if your code is lightning-fast, your infrastructure might be the bottleneck.

In this article, we'll explain why colocating your trading bot and Solana RPC node within the same data center or network is crucial. We’ll show how proper placement cuts latency by 5–10×, improves transaction inclusion rates, and gives you the consistency Solana’s slot-based design demands.

Why latency matters in Solana trading

The network operates at blistering speed, with a slot time of ~400ms, meaning validators rotate every 0.4 seconds to produce new blocks. That gives traders a very narrow window to submit and confirm transactions. If you miss it, you’re out.

Let’s break it down through real strategies:

Arbitrage

Imagine you spot a price gap between two DEXes on Solana. Your bot must submit a transaction faster than others exploiting the same gap. If there’s 100–150ms latency between your trading system (e.g., hosted in Frankfurt) and your Solana RPC (e.g., in US-East), that round trip alone can eat 25–35% of the slot time—before the RPC even forwards the transaction to the validator.

The result? You miss the active leader slot, and your arbitrage leg gets front-run or ignored.

Front-running (pre-confirmation order flow)

Some strategies rely on reacting to pending transactions visible in the mempool or propagated via block builders like Jito. These trades must land within the same or next slot to front-run effectively. Delayed submission means your transaction queues up behind others or worse, lands after the opportunity disappears. Even microbursts of 50ms jitter can break your edge when slots rotate every 400ms.

Liquidations

Protocols like margin lending rely on liquidation bots to close under-collateralized positions. It’s a race. The first to submit the liquidation TX gets the bounty. But with cross-region latency, your bot may already be at a disadvantage. In peak congestion (common during market crashes), Solana’s mempool fills fast, and only transactions with low latency + priority fees get through.

Real-world impact

A 150ms round-trip delay (typical between Europe and US) often means your transaction misses the current slot, forcing you to wait for the next one—or worse, two slots ahead due to congestion or validator churn. That’s a guaranteed disadvantage in any latency-sensitive scenario.

And remember: Solana doesn’t wait. Validators execute as soon as they receive a full block entry, without waiting for slower nodes to catch up. If your RPC or bot is behind—even by a fraction of a second—your transaction gets sidelined.

What co-location actually means

In the context of Solana trading infrastructure, co-location means hosting your trading bot and Solana RPC node in the same physical data center, ideally on the same bare-metal server or within a low-latency LAN segment. It eliminates geographic hops, slashes network jitter, and minimizes TCP/UDP traversal delays, which are mission-critical on a 400ms slot chain.

Latency on Solana isn’t just about global round-trip time (RTT). It’s about the number of hops and queuing delays between three components:

- Trading logic (where the decision is made)

- RPC endpoint (which signs/forwards the transaction)

- Validator TPU (Transaction Processing Unit, where the block is built)

Each hop introduces microseconds to milliseconds of delay, especially under load. At HFT speeds, even 1ms jitter can push a transaction outside the current slot window—effectively invalidating it for front-running, liquidation, or arbitrage.

Cloud VMs introduce virtualization overhead, noisy neighbors, shared NICs, and unpredictable CPU throttling. In contrast, bare-metal servers offer:

- Dedicated CPU cores and PCIe bandwidth

- Direct access to 10–25 Gbps NICs (InfiniBand or low-jitter Ethernet)

- Zero hypervisor scheduling delays

In tests by latency-focused teams using co-located bare metal in Equinix NY5 and Frankfurt FR5, sub-30ms RPC ↔ validator ↔ trader latency was achieved across the full path—including signing, forwarding, and confirmation tracking (source: Packet.com latency benchmarks).

Validator-adjacent RPC access

To achieve true slot-level performance, your RPC node must be as close as possible to a current block producer. There are three strategies:

a) Peered RPC and Validator in Same DC

Set up a non-voting validator node alongside your RPC in the same rack. This node syncs with the cluster and forwards transactions directly to the current leader's TPU via QUIC or Turbine. This avoids gossip relay latency and allows near-zero hop TX propagation.

b) Staked RPC with Stake-Weighted QoS

A staked RPC node (running on a validator identity with delegated stake) can inject TX packets with stake-based priority into the Solana QUIC pipeline. As described in Solana’s SWQoS spec, this gives up to X% of network bandwidth to your identity, where X = your validator’s stake share. This drastically reduces TX drop probability under congestion.

c) Direct TPU Access via Jito/QUIC Proxy

For setups that integrate Jito Labs infrastructure, RPC nodes can forward bundles and single TXs directly to the validator’s Transaction Processing Unit (TPU), skipping mempool propagation altogether. According to Jito’s block builder docs, TPU-bound TXs confirmed within the same slot often see <50ms confirmation latency, enabling high-speed arbitrage and MEV capture.

Co-location implementation: Best practice snapshot

A well-architected stack achieves:

- End-to-end TX submission → block inclusion: ~20–35ms

- Account update subscription latency: ~10–15ms

- Zero dropped transactions under 6,000 TPS load

Cloud regions and physical distance

When it comes to Solana trading infrastructure, physical distance isn’t just geography—it’s latency, slot timing, and risk of transaction failure. Choosing the wrong cloud region can instantly add +100ms or more to every critical operation, pushing your bot a full slot behind the market.

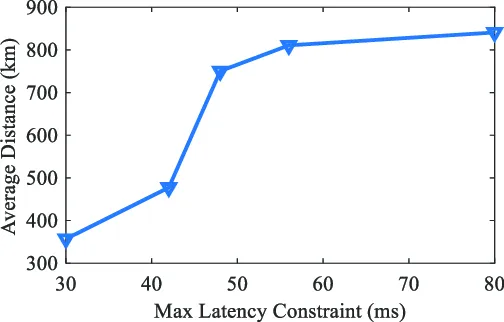

Latency benchmarks across major regions

Here’s a measured snapshot of round-trip latencies between common cloud regions and Solana validator clusters (measured via ICMP/ping + TCP RTT via RIPE Atlas and cloud traceroutes):

At Solana’s 400ms slot time, even 50ms of added latency means missing the opportunity to hit the current leader—especially under load. 150ms+ RTT (like from APAC to EU) essentially makes real-time arbitrage or priority-based MEV infeasible.

BGP routing and unexpected latency

One common pitfall is assuming cloud regions are always “close” because of city names. But BGP (Border Gateway Protocol) routing doesn’t always follow geographic logic. For example:

- Hetzner’s Ashburn servers may exit via EU peering points due to limited US upstreams, adding >100ms latency to local US destinations.

- Cloudflare Anycast might route a user from Berlin to a validator in London via Paris, depending on peering agreements and current load.

- AWS and GCP both run virtual zones that span physical data centers. eu-central-1a might land your instance in a different building from eu-central-1b, with ~2–5ms of cross-zone latency.

Packet loss also becomes a real risk on transcontinental routes. Even 0.5% packet loss on UDP streams (like QUIC → TPU) means unreliable transaction propagation under congestion—a critical issue for Solana validators.

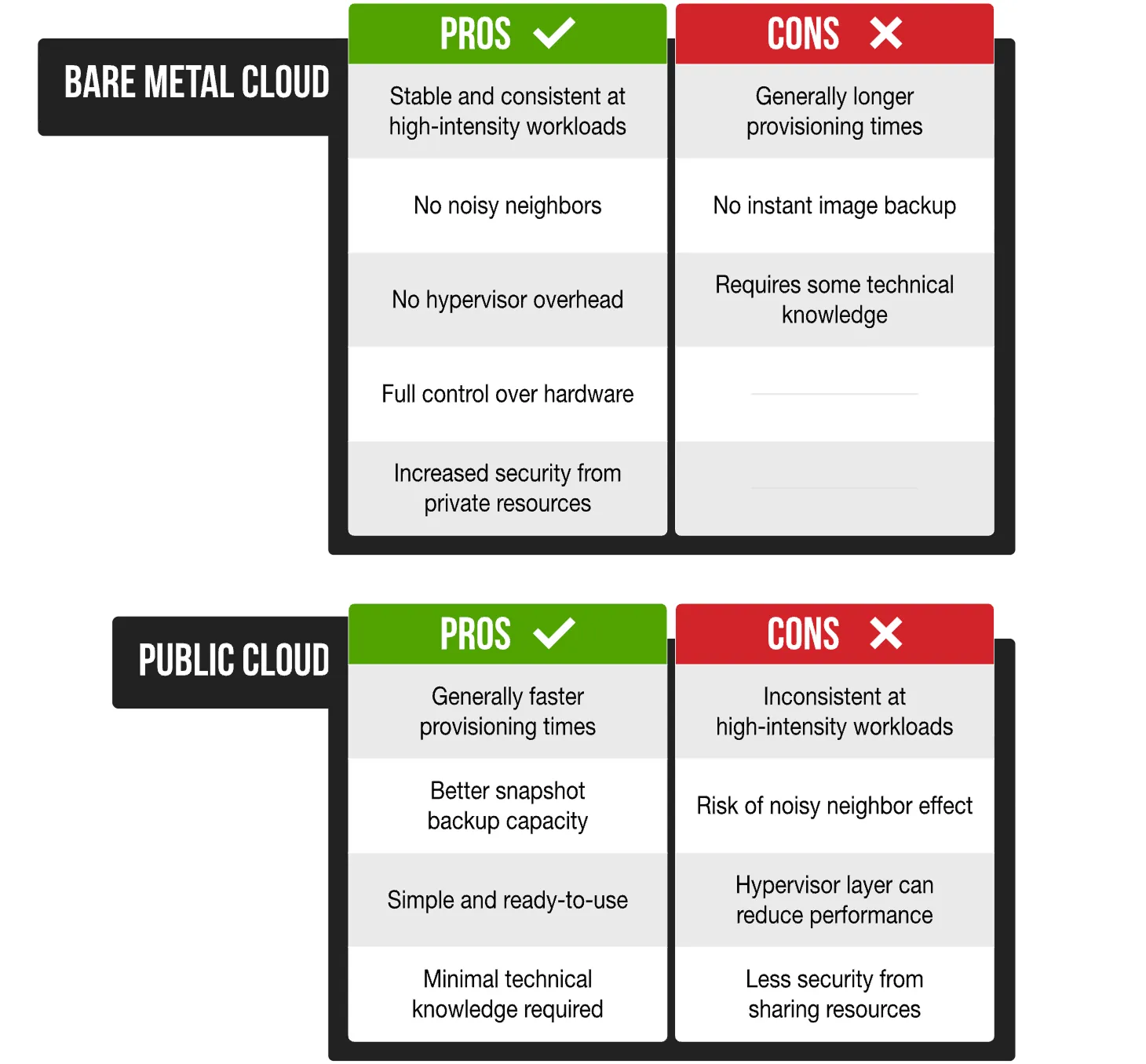

Bare metal vs. Cloud for co-location

When it comes to latency-critical Solana trading infrastructure, bare-metal servers offer one decisive advantage: network determinism. With dedicated hardware, there's no noisy neighbor effect, no hypervisor contention, and full control over NIC tuning, CPU pinning, and I/O isolation. In real benchmarks, bare-metal instances consistently outperform cloud VMs in P99 tail latency, even when mean latency appears similar.

High-frequency trading (HFT) teams typically opt for colocated bare-metal deployments near validator nodes to shave off tens of milliseconds in critical execution paths. Bare-metal also enables direct BGP peering, jumbo frame tuning, and ultra-low-latency kernel configurations (e.g., using net.core.busy_poll, IRQ affinity settings, and dpdk stack bypass).

However, co-location is possible in cloud environments too—if you know what you're doing:

- Choosing the right availability zone (e.g., AWS eu-central-1a is closer to certain Solana clusters than 1c)

- Using compute-optimized instances (like AWS c7n or GCP C3)

- Avoiding noisy shared networks by selecting dedicated ENA- or GVE-enabled NICs

- Leveraging placement groups and cluster networking modes for intra-region proximity

Some hybrid approaches even colocate the latency-critical path (RPC proxy + TPU forwarder) on bare metal, while running stateless services (indexers, metrics, health checks) in the cloud.

Container orchestration adds another layer of complexity. While Kubernetes offers operational scale and observability, it can introduce 10–50ms jitter due to pod rescheduling, CNI abstraction, or cloud autoscaler delays. In contrast, Docker Compose or raw systemd services on bare metal often yield more stable latency profiles—albeit with fewer devops luxuries. For real-time RPC workloads, some teams pin critical containers directly to host CPU cores and bypass overlay networks entirely.

Bottom line: bare metal remains king for precision latency, but high-performance cloud setups—when carefully architected—can be viable for co-location with validator-aligned RPC.

Optimizing the transport layer

Once you've physically colocated your RPC with your trading engine, the next bottleneck is transport. On Solana, the race isn’t won with TCP alone.

QUIC is Solana’s transport of choice for transaction forwarding to validator TPU ports (Transaction Processing Units). Unlike TCP, QUIC offers:

- 0-RTT handshakes

- Built-in encryption and multiplexing

- Better congestion control on high-throughput paths

Critically, QUIC supports connection reuse and out-of-order packet delivery, which reduces retransmission delays. This is essential when targeting Solana's 400ms slot time—a 50ms delay in pushing to the wrong leader means waiting a full slot.

Beyond QUIC, advanced RPC infrastructure integrates direct UDP push into the validator TPU. Solana’s Turbine protocol propagates blocks as UDP shreds, and a latency-optimized RPC stack can inject transactions directly into the Turbine layer. This bypasses the gossip network, skipping seconds of potential propagation lag.

Slot-aware routing is another frontier. At RPC Fast, for example, our system dynamically detects the current leader slot (via vote tracking and validator telemetry) and routes high-priority transactions to that validator’s TPU via the lowest-latency transport. If the validator is in Frankfurt and your bot is colocated there, this path ensures <5ms delivery time—fast enough to beat global arbitrage bots still looping through shared public RPCs.

Advanced setups also support fallback relays and bundle-aware forwarding (e.g., via Jito), combining slot-awareness with MEV-friendly routing. This kind of routing precision is what separates real-time RPC from generic JSON-over-TCP solutions.

Final thought: Co-location isn’t optional—it’s infrastructure alpha

For latency-sensitive strategies on Solana, such as arbitrage, liquidations, and MEV execution, every millisecond counts. With ~400ms slot times and validators rotating every block, even a 100–150ms delay between your trading bot and the leader’s TPU can mean the difference between a filled order and a missed opportunity.

While Solana’s protocol is built for speed, your infrastructure determines your edge. Shared RPCs and misaligned cloud regions introduce invisible bottlenecks: stale data, late submissions, and unpredictable jitter. “Good enough” RPC access might work for NFT mints, but it fails under the real demands of DeFi automation.

At RPC Fast, we’ve seen this firsthand. Our clients, from HFT desks to programmatic liquidity providers, moved to validator-adjacent RPC clusters with dedicated routing and gRPC streaming.

In one case, RPC Fast deployed a hybrid setup for Mizar, an all-in-one CeFi+DeFi trading platform, spinning up 2× Base and 1× Solana node in under 3 days. By integrating Yellowstone gRPC and bloXroute/Jito relays, latency dropped significantly. With pre-synced spares and Grafana-based observability, node swaps now take ~15 minutes, enabling scale without disruption.

This is why we built RPC Fast as a service: to give Web3 teams and trading firms the performance foundation they need without the overhead of managing deep infrastructure.

.svg)

.svg)

.svg)