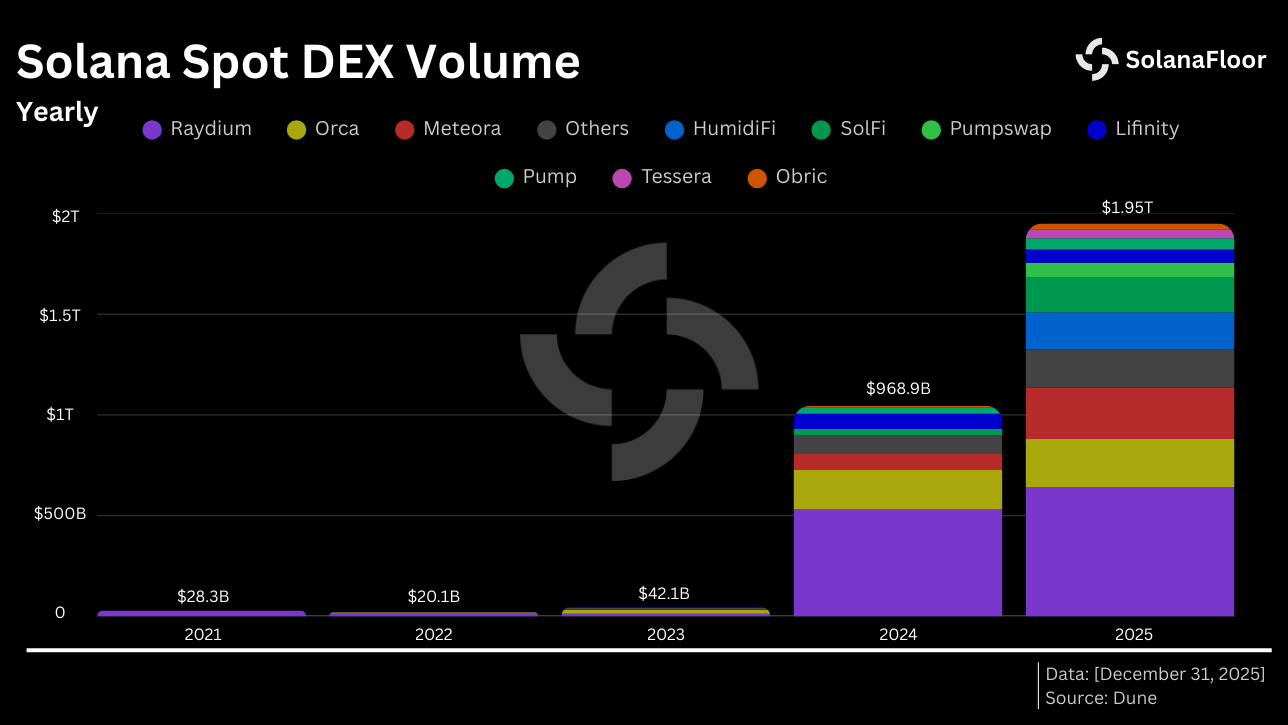

Solana processed over $1 trillion in DEX volume in 2025 alone, a nearly 400% increase from the year before. Network revenue exceeded $1.4 billion, with MEV capture accounting for over $720 million of that figure. At the same time, Firedancer went live on mainnet, Alpenglow passed its governance vote with overwhelming validator support, and spot Solana ETFs launched in the U.S. and Europe.

These aren't just milestones for the network. They are signals that Solana's trading infrastructure has fundamentally matured. What was once an experimental high-speed chain is now the backbone for serious capital flows—from automated trading bots and institutional DeFi to real-time stablecoin settlements.

But here's the reality that doesn't make it into headlines: Solana's speed is only as good as the infrastructure you use to access it. A 400ms block time means nothing if your RPC lags by 3 slots, your transactions miss the target slot, or your data feeds arrive after the opportunity has already closed.

This guide breaks down every layer of Solana's trading infrastructure as it stands in 2026—from the protocol-level upgrades to the RPC stack, MEV tooling, data streaming, and the architectural decisions that separate competitive traders from everyone else.

The protocol layer: what changed and why it matters

Two upgrades define the current state of Solana's performance: Firedancer and Alpenglow.

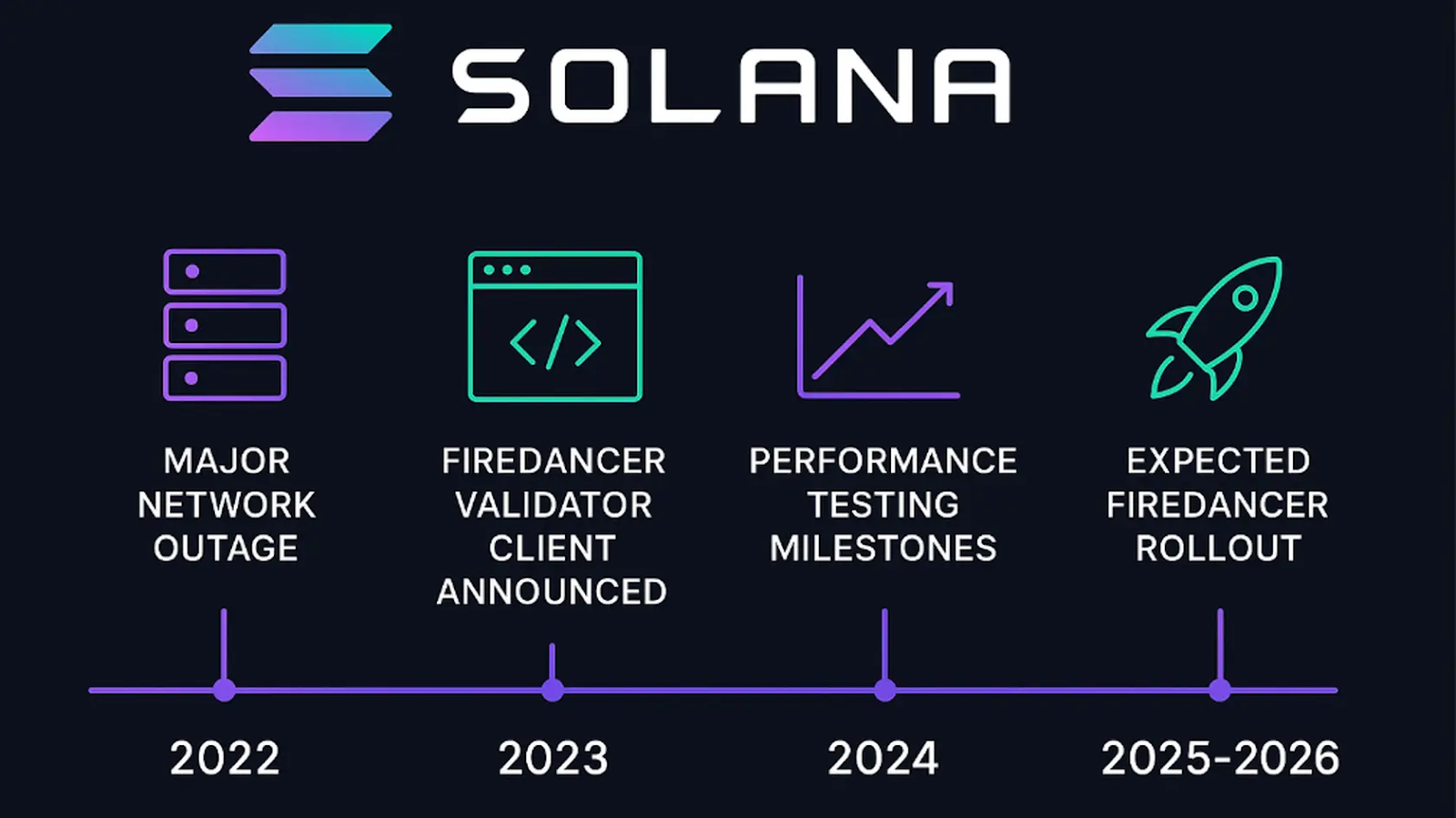

Firedancer is a validator client developed by Jump Crypto, written in C/C++ and designed to maximize hardware efficiency. Unlike the original Agave client (and its Jito-modified variant), Firedancer uses a modular, tile-based architecture that splits validator tasks into parallel processes. In internal benchmarks, it demonstrated throughput exceeding 1 million transactions per second on commodity hardware. As of the start of 2026, over 200 validators had adopted the Frankendancer hybrid variant, and the full client went live on mainnet in December 2025.

The impact for trading is twofold. First, client diversity reduces the risk of network-wide outages caused by a single codebase bug—something that plagued Solana in earlier years. Second, Firedancer's raw processing power means validators can handle significantly more transaction throughput without degradation, which directly benefits high-frequency and latency-sensitive operations.

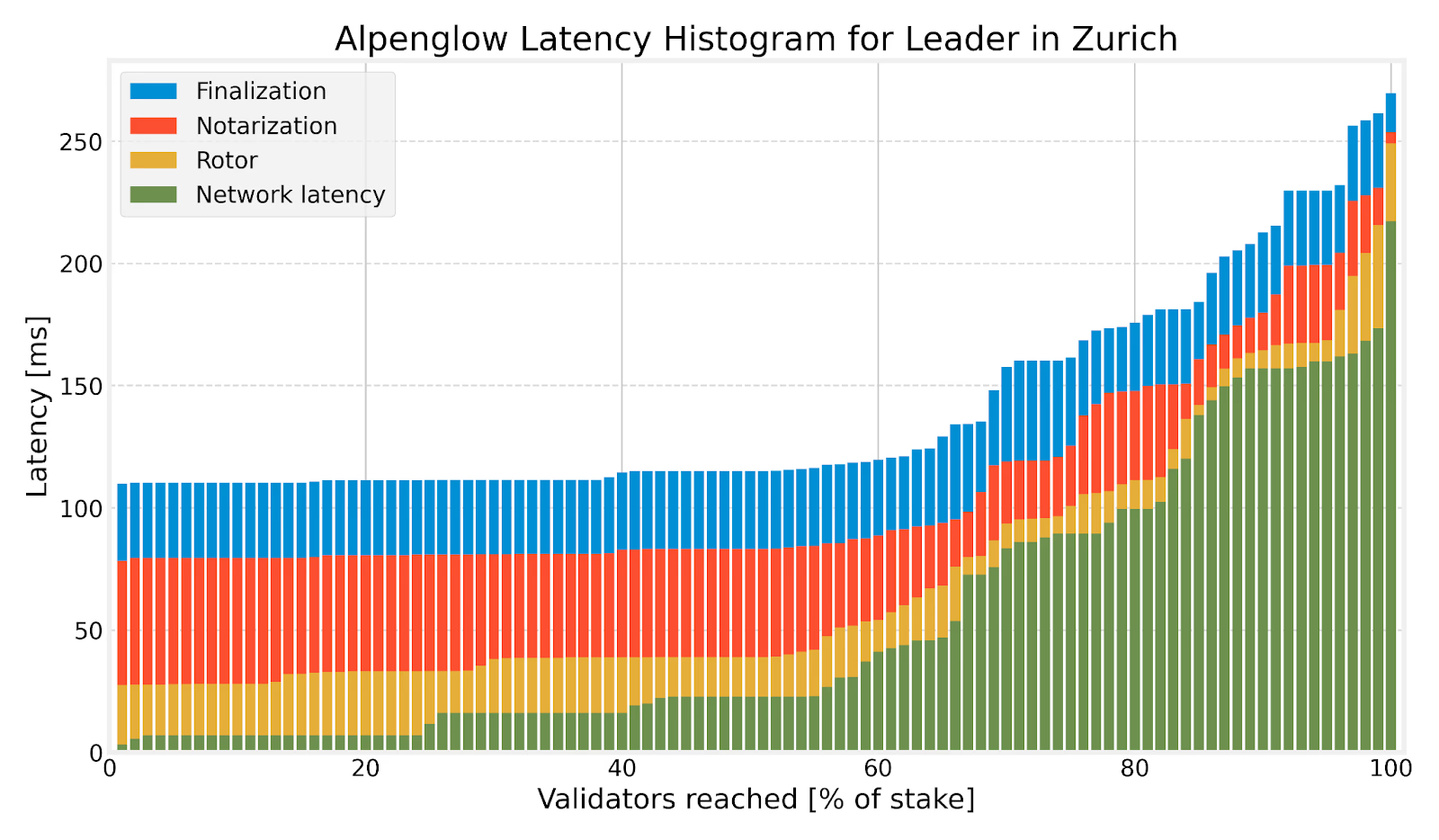

Alpenglow is the consensus-layer overhaul that replaces Solana's original Proof of History and Tower BFT mechanisms. It introduces two new components—Votor (a voting protocol using out-of-band, stake-weighted certification) and Rotor (a block propagation layer with stake-weighted relay paths). The result: finality drops from roughly 12 seconds to approximately 150 milliseconds, an 80x improvement. The upgrade also eliminates on-chain vote transactions, which historically consumed a large portion of block space and added operational costs for validators. Mainnet deployment is targeted for Q2 2026.

For traders, sub-200ms finality changes the game. Once Alpenglow is live on mainnet, high-frequency strategies, on-chain arbitrage, and liquidation bots will be able to operate with confirmation speeds that rival centralized exchanges. Stale blockhash errors, a common headache when RPC nodes lag, become far less likely when the chain itself finalizes faster.

The DEX ecosystem: where the volume lives

Solana's DEX landscape in 2026 is no longer dominated by a single venue. The ecosystem has diversified significantly, and understanding the trading venues is essential to building effective infrastructure around them.

Key platforms and their roles:

- Jupiter—the dominant aggregator, responsible for routing over 55% of all DEX volume in 2025 (exceeding $334 billion). It also leads in perpetual futures with $264 billion in perps volume. Jupiter captures more than 90% of aggregator activity on Solana and offers limit orders, DCA, and perps alongside spot aggregation.

- Raydium—the largest execution-layer DEX, processing nearly $642 billion in spot volume in 2025. Its direct integration with OpenBook's on-chain order book gives it a hybrid AMM/order book model that appeals to market makers.

- Meteora—grew to $254 billion in volume, establishing itself as a major alternative AMM with concentrated liquidity features.

- Orca—recorded $237 billion in volume, known for a clean UX and strong concentrated liquidity pools.

- Prop-AMMs (HumidiFi, SolFi, Tessera)—a new category of proprietary automated market makers that now account for over 80% of aggregator-routed volume. They use dynamic pricing models that create short-lived inefficiencies, driving cyclic arbitrage and reshaping how liquidity is sourced.

The rise of prop-AMMs is arguably the most important structural change in Solana trading since aggregators themselves. Cyclic arbitrage activity jumped from 2.5% of aggregator-executed DEX volume in August 2024 to over 40% by late 2025. For infrastructure builders, this means the speed at which you detect and react to pricing discrepancies across pools is now a primary competitive axis.
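
To make the idea of cyclic arbitrage concrete, here is a minimal Python sketch. It assumes hypothetical quoted exchange rates for a three-hop cycle and a flat per-swap fee; the numbers, token pairs, and function names are illustrative and do not come from any real pool or prop-AMM. Under those assumptions, a cycle is worth taking only when the product of the rates, net of fees, is greater than 1.

```python
from math import prod

def cycle_return(rates, fee_per_swap=0.0):
    """Multiple of starting capital left after walking every hop of the cycle.

    rates: exchange rate for each hop (output units per input unit).
    fee_per_swap: fraction of the input taken on every hop (assumed flat).
    """
    return prod(rate * (1.0 - fee_per_swap) for rate in rates)

def is_profitable(rates, fee_per_swap=0.0):
    """A cycle is profitable when it returns more than it started with."""
    return cycle_return(rates, fee_per_swap) > 1.0

# Hypothetical quotes: SOL -> USDC, USDC -> TOKEN, TOKEN -> SOL
hops = [150.0, 2.0, 1 / 297.0]
print(cycle_return(hops))                        # gross return before fees
print(is_profitable(hops, fee_per_swap=0.0025))  # still profitable at 0.25% per hop?
print(is_profitable(hops, fee_per_swap=0.01))    # and at 1% per hop?
```

The sketch ignores price impact, priority fees, and tips, all of which shrink the margin further. That is why detection speed matters: the discrepancy is small and short-lived.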

The RPC layer: your actual connection to the chain

Everything a trader does on Solana (reading account states, monitoring pools, submitting transactions, tracking confirmations) flows through RPC (Remote Procedure Call) infrastructure. Even if the chain finalizes in about 150ms, an RPC node that is 3 slots behind serves data that is over a second old (3 × 400ms = 1,200ms). Blockhashes expire after 151 slots (roughly 60 seconds), so stale data doesn't just mean missed opportunities: it means rejected transactions.
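
The arithmetic behind those numbers can be sketched in a few lines of Python. The constants are the figures quoted in this article (400ms slots, a 151-slot blockhash window); the function names are hypothetical, and real slot times vary around the nominal value.

```python
SLOT_TIME_MS = 400           # nominal slot time cited in this article
BLOCKHASH_VALID_SLOTS = 151  # blockhash expiry window cited in this article

def staleness_ms(rpc_slot, tip_slot):
    """Approximate age of the RPC node's view, given how far it trails the tip."""
    return max(0, tip_slot - rpc_slot) * SLOT_TIME_MS

def blockhash_slots_left(blockhash_slot, tip_slot):
    """Slots remaining before a blockhash produced at blockhash_slot expires."""
    return max(0, BLOCKHASH_VALID_SLOTS - (tip_slot - blockhash_slot))

print(staleness_ms(rpc_slot=997, tip_slot=1000))                 # 3 slots behind
print(blockhash_slots_left(blockhash_slot=1000, tip_slot=1100))  # validity left
```

A lagging node also hands out older blockhashes, so every transaction built from its data starts with less of that validity window remaining.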

Here's what matters when evaluating RPC infrastructure for trading:

- Slot lag—the number of slots your RPC trails behind the network tip. Even 2–3 slots of lag (800–1200ms) introduces stale reads and increases transaction rejection rates.

- Transaction landing rate—the percentage of submitted transactions that actually make it into a block. This depends on how your RPC forwards transactions to the current leader validator.

- First-block inclusion—whether your transaction lands in the very next block after submission. Production-grade setups target 80%+ first-block inclusion.

- Throughput under load—shared RPC endpoints degrade during congestion events (token launches, high-volume trading bursts). Multi-tenant clusters throttle all customers when one customer surges.
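
As a rough illustration of how the landing-rate and first-block-inclusion metrics above could be computed from your own submission logs, here is a small Python sketch. The `Submission` record and its fields are hypothetical, and "first block" is interpreted here as landing no later than the slot after the one observed at send time, which is an assumption rather than a standard definition.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Submission:
    sent_slot: int              # network tip slot observed when the tx was sent
    landed_slot: Optional[int]  # slot that included the tx, or None if it never landed

def landing_rate(subs: List[Submission]) -> float:
    """Fraction of submitted transactions that made it into any block."""
    if not subs:
        return 0.0
    landed = sum(1 for s in subs if s.landed_slot is not None)
    return landed / len(subs)

def first_block_inclusion_rate(subs: List[Submission]) -> float:
    """Fraction of all submissions that landed within one slot of being sent."""
    if not subs:
        return 0.0
    first = sum(
        1 for s in subs
        if s.landed_slot is not None and s.landed_slot - s.sent_slot <= 1
    )
    return first / len(subs)

log = [
    Submission(100, 101),   # landed in the next slot
    Submission(100, 103),   # landed, but late
    Submission(101, None),  # dropped
    Submission(102, 102),   # landed in the same slot
]
print(landing_rate(log), first_block_inclusion_rate(log))
```

Tracking both numbers separately is useful because a setup can land most transactions eventually while still missing the slot the strategy was aiming for.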

The distinction between shared and dedicated nodes is critical. Shared endpoints work fine for development and light-load dApps, but trading operations that depend on predictable latency need dedicated, single-tenant infrastructure. This is especially true for market-making bots, MEV searchers, and any strategy where milliseconds translate directly into profit or loss.

For production trading, the minimum viable setup typically includes dedicated RPC nodes running on bare-metal hardware with NVMe SSDs, 512GB+ RAM, and CPU pinning to minimize replay lag. Staked RPC nodes—which carry a validator identity with delegated stake—can inject transactions with stake-based priority into Solana's QUIC pipeline via Stake-Weighted Quality of Service (SWQoS), dramatically reducing transaction drop probability under congestion.

Data streaming: Yellowstone gRPC, Jito ShredStream, and beyond

Polling an RPC node for state changes is the slow way to build trading infrastructure. In 2026, the standard for competitive Solana trading is event-driven data streaming.

Two technologies dominate this space:

Yellowstone gRPC (a Geyser plugin) provides filtered, structured data streams directly from the validator. You subscribe to specific accounts, programs, slots, or transaction types, and receive updates via gRPC with sub-10ms local latency when properly tuned. It's ideal for monitoring liquidity pool states, tracking token balances, feeding trading logic, and powering real-time dashboards. The key advantage is precision: you get only the data you need, avoiding the compute overhead of processing irrelevant transactions.

Jito ShredStream goes one level deeper. Instead of waiting for the validator to fully process and replay blocks, ShredStream delivers raw shreds (the smallest units of block data) directly via gRPC—before validation and replay are complete. In practice, this means receiving transaction data approximately 200–500ms earlier than standard Yellowstone feeds. For MEV searchers and sniper bots, this time advantage can determine whether you capture an opportunity or miss it entirely.

Many competitive trading teams now combine both: ShredStream for earliest possible awareness of new transactions, and Yellowstone for structured, filtered data feeds that power trading logic. The trade-off is complexity—running and maintaining this dual-stream setup requires significant infrastructure expertise.
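
One piece of that complexity is reconciling the same transaction arriving on two feeds. The Python sketch below shows the idea under simplifying assumptions: each feed is modeled as a plain list of `(signature, received_at_ms, source)` tuples, the names are hypothetical, and no real ShredStream or Yellowstone client API is used. A live system would do this incrementally as events arrive rather than over completed lists.

```python
def merge_first_seen(*feeds):
    """Merge several feeds, keeping the earliest sighting of each signature.

    Each feed is an iterable of (signature, received_at_ms, source) tuples.
    Returns {signature: (received_at_ms, source)}.
    """
    first_seen = {}
    for feed in feeds:
        for signature, received_at_ms, source in feed:
            current = first_seen.get(signature)
            if current is None or received_at_ms < current[0]:
                first_seen[signature] = (received_at_ms, source)
    return first_seen

# Illustrative timestamps only: the raw feed sees sig_a earlier, sig_c appears on one feed.
raw_feed = [("sig_a", 1000, "shreds"), ("sig_b", 1010, "shreds")]
structured_feed = [("sig_a", 1300, "geyser"), ("sig_b", 1005, "geyser"), ("sig_c", 1400, "geyser")]

for sig, (ts, source) in sorted(merge_first_seen(raw_feed, structured_feed).items()):
    print(sig, ts, source)
```

Keeping the source of the first sighting also gives you a running measurement of how much lead time the early feed actually delivers in your own environment.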

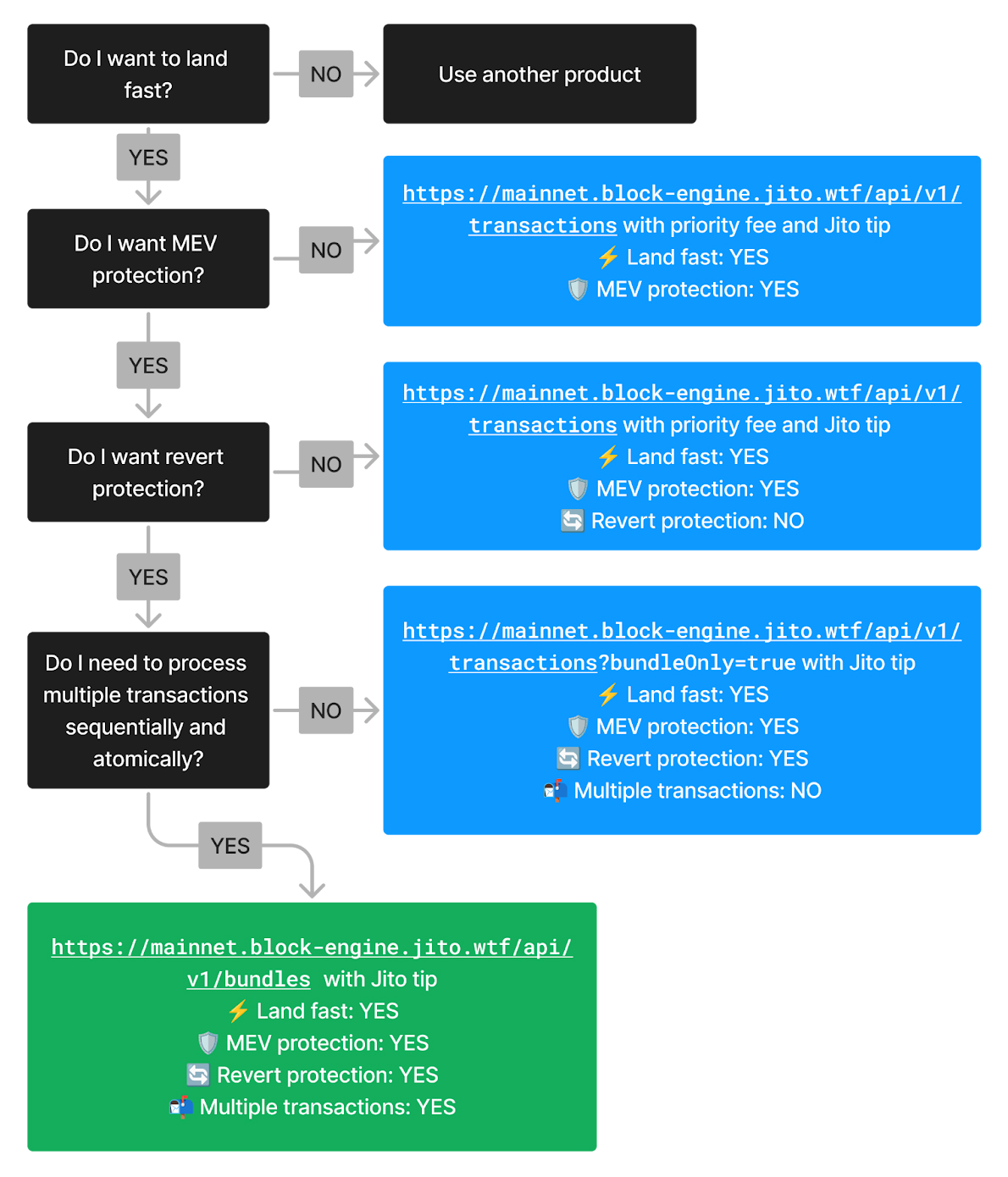

MEV and transaction submission

Maximal Extractable Value (MEV) on Solana is a $720+ million annual market, and the infrastructure around it has become highly sophisticated.

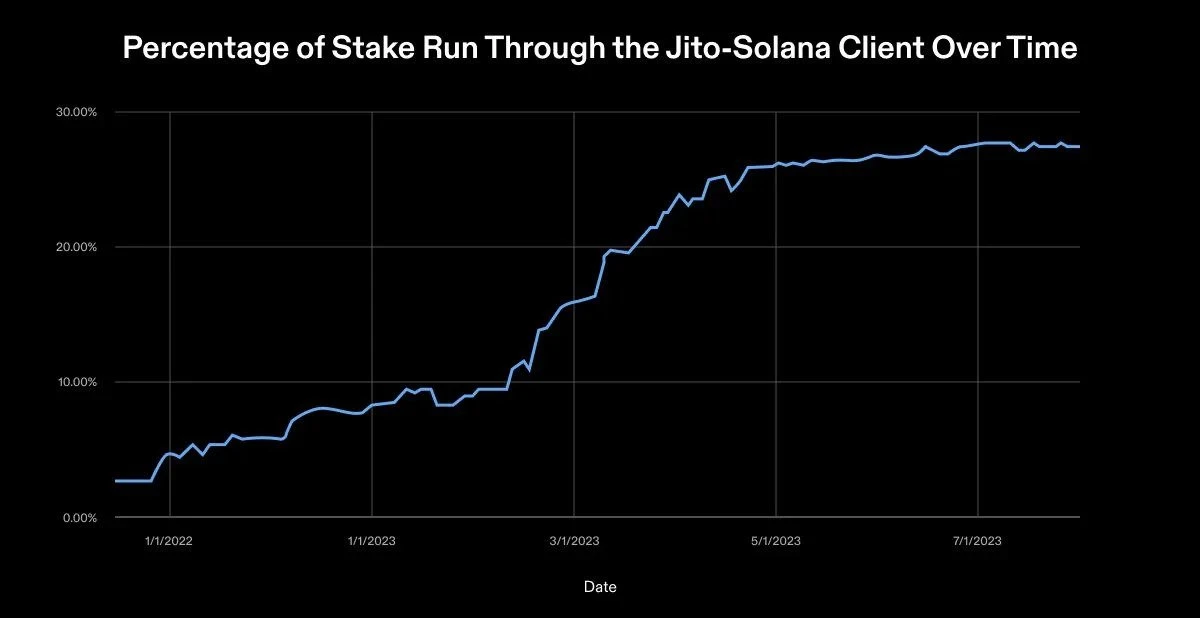

Jito's block engine is the central piece. Validators running Jito-Solana (the modified Agave client) connect to Jito's block engine, which accepts transaction bundles from searchers, bots, and dApps. Bundles are groups of up to 5 transactions executed sequentially and atomically—all or nothing. They include tip bids that get redistributed to validators and stakers, creating an incentive-aligned system for priority inclusion.
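
The all-or-nothing behavior can be modeled with a toy Python sketch. This is not the Jito API: `transfer` and `execute_bundle` are hypothetical helpers that operate on an in-memory balance table, and the only figure taken from the text is the limit of 5 transactions per bundle.

```python
MAX_BUNDLE_SIZE = 5  # per-bundle transaction limit cited in this article

def transfer(src, dst, amount):
    """Build a toy transaction that moves `amount` from src to dst."""
    def apply(balances):
        if balances.get(src, 0) < amount:
            raise ValueError(f"insufficient funds in {src}")
        balances[src] = balances.get(src, 0) - amount
        balances[dst] = balances.get(dst, 0) + amount
    return apply

def execute_bundle(balances, transactions):
    """Run transactions in order on a copy; commit only if every one succeeds.

    Returns (resulting_balances, committed).
    """
    if not 1 <= len(transactions) <= MAX_BUNDLE_SIZE:
        raise ValueError("a bundle must contain between 1 and 5 transactions")
    working = dict(balances)
    for tx in transactions:
        try:
            tx(working)
        except ValueError:
            return balances, False  # any failure discards the whole bundle
    return working, True

start = {"a": 10, "b": 0}
print(execute_bundle(start, [transfer("a", "b", 4), transfer("b", "a", 1)]))
print(execute_bundle(start, [transfer("a", "b", 4), transfer("a", "b", 100)]))
```

In the second call the first transfer would succeed on its own, but because the second one fails, the original balances are returned untouched. That is the property that makes bundles suitable for multi-leg arbitrage and liquidations.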

Key features of the Jito ecosystem for traders:

- Bundle submission—atomic execution of multi-transaction strategies (arbitrage, liquidations) with revert protection.

- Fast transaction sending—low-latency pathways for individual transactions.

- ShredStream—as described above, the earliest data feed available.

- MEV protection—anti-sandwich mechanisms, including the ability to add "jitodontfront" identifiers to transactions.

Beyond Jito, newer tooling like Yellowstone Shield introduces on-chain allowlists and blocklists for validators. Combined with the Yellowstone Jet TPU Client, traders can route transactions directly to trusted validators via QUIC, bypassing potentially malicious leaders entirely. This is an important development for teams that want MEV-resistant execution without sacrificing speed.

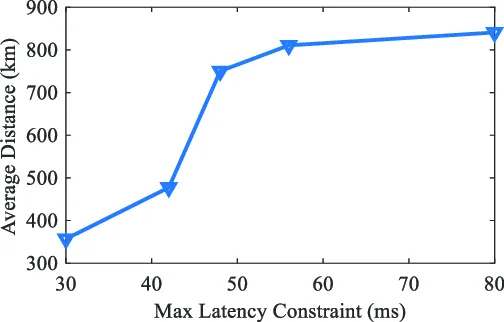

Co-location and physical infrastructure

Solana's 400ms slot time creates a brutal physical constraint: the speed of light matters. A 150ms round-trip delay between your bot and the nearest validator can mean your transaction misses the current slot and waits for the next one. Under congestion, that delay compounds.
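
A back-of-the-envelope latency budget shows why. The Python sketch below uses the 400ms slot time from the text and treats one-way latency as half of the round trip; the function name and the example timings are hypothetical, and the model deliberately ignores leader rotation, queuing at the leader, and jitter.

```python
SLOT_TIME_MS = 400  # nominal slot time cited in this article

def slots_missed(ms_into_slot, decision_ms, one_way_latency_ms):
    """Number of slot boundaries crossed before the transaction reaches the leader.

    ms_into_slot: how far into the current slot the trading signal was observed.
    decision_ms: time the bot spends deciding, building, and signing.
    one_way_latency_ms: network time from the bot to the leader.
    """
    arrival_ms = ms_into_slot + decision_ms + one_way_latency_ms
    return int(arrival_ms // SLOT_TIME_MS)

# Signal seen 300ms into the slot, 30ms to decide and sign.
print(slots_missed(300, 30, one_way_latency_ms=75))  # remote bot, 150ms round trip
print(slots_missed(300, 30, one_way_latency_ms=5))   # co-located bot
```

With these assumed timings the remote bot arrives 405ms after the slot began and slips into the next slot, while the co-located bot arrives at 335ms and still has room in the current one.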

Co-location means hosting your trading bot and RPC node in the same physical data center, ideally on the same bare-metal server or within a low-latency LAN segment. It eliminates geographic hops, reduces jitter, and minimizes TCP/UDP traversal delays. For competitive operations, sub-50ms round-trip times between bot and RPC are the norm.

The most advanced setups place staked RPC nodes with direct validator peering inside the same data center as their trading logic. This enables QUIC-based TPU forwarding with near-zero hop transaction propagation. Combined with Jito bundle submission and ShredStream data feeds, this architecture achieves the tightest possible execution loop on Solana.

Putting the stack together

A competitive Solana trading setup in 2026 typically includes the following layers:

- Dedicated bare-metal RPC node with NVMe storage, high RAM, and CPU pinning—ideally staked for SWQoS priority.

- Jito ShredStream subscription for earliest block data awareness.

- Yellowstone gRPC for filtered, structured data feeds powering trading logic.

- Jito block engine integration for bundle submission with atomic execution and MEV protection.

- Co-located trading bot in the same data center as the RPC node, minimizing network hops.

- Multi-region failover with automatic rerouting to secondary endpoints during outages or latency spikes.

- Monitoring and observability—real-time slot lag tracking, transaction landing rate dashboards, and latency percentile metrics (p50, p95, p99).
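
The monitoring and failover layers can be prototyped with very little code. The Python sketch below computes p50/p95/p99 from latency samples using the standard library and picks an endpoint based on health, slot lag, and tail latency. The endpoint records, field names, and the `max_slot_lag` threshold are hypothetical; a production setup would feed these from live probes.

```python
from statistics import quantiles

def latency_percentiles(samples_ms):
    """Return p50, p95, and p99 of the samples (needs at least two samples)."""
    cuts = quantiles(samples_ms, n=100, method="inclusive")  # 99 cut points
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98]}

def pick_endpoint(endpoints, max_slot_lag=1):
    """Choose the healthy endpoint with the lowest slot lag, then lowest p99.

    Endpoints over max_slot_lag are used only if nothing better is healthy.
    Returns the endpoint name, or None if every endpoint is unhealthy.
    """
    healthy = [e for e in endpoints if e["healthy"]]
    fresh = [e for e in healthy if e["slot_lag"] <= max_slot_lag]
    candidates = fresh or healthy
    if not candidates:
        return None
    return min(candidates, key=lambda e: (e["slot_lag"], e["p99_ms"]))["name"]

samples = list(range(1, 102))  # synthetic latencies: 1ms .. 101ms
print(latency_percentiles(samples))

endpoints = [
    {"name": "primary", "healthy": True, "slot_lag": 3, "p99_ms": 20.0},
    {"name": "secondary", "healthy": True, "slot_lag": 0, "p99_ms": 45.0},
    {"name": "tertiary", "healthy": False, "slot_lag": 0, "p99_ms": 10.0},
]
print(pick_endpoint(endpoints))
```

Ranking by slot lag before latency is a design choice in this sketch: a fast response carrying stale state is usually worse for trading than a slightly slower one that is current.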

This isn't theoretical—it's what trading desks, MEV searchers, and institutional DeFi operations are running today. And it's only going to get more competitive as Alpenglow's sub-200ms finality goes live and Firedancer adoption accelerates across the validator set.

Infrastructure as the real edge

Solana's protocol performance in 2026 is exceptional. But the gap between what the chain can do and what your application actually experiences is determined entirely by your infrastructure stack. The fastest strategy in the world fails if it runs on a lagging RPC, receives data through polling instead of streaming, or submits transactions through shared endpoints during peak congestion.

At RPC Fast, we build Solana infrastructure specifically for this reality. Our bare-metal dedicated nodes are deployed across multiple global regions with Jito ShredStream enabled by default, Yellowstone gRPC streaming, staked RPC paths for SWQoS priority, and automated failover with sub-50ms recovery. Whether you're running HFT bots, market-making operations, or building DeFi protocols that need real-time consistency under load—we provide the infrastructure layer that makes your trading logic actually work at the speed Solana promises.

If your current setup relies on shared endpoints or you're still polling for state changes, you're leaving performance (and profit) on the table. Talk to our team and see what purpose-built Solana trading infrastructure can do for your execution quality.
