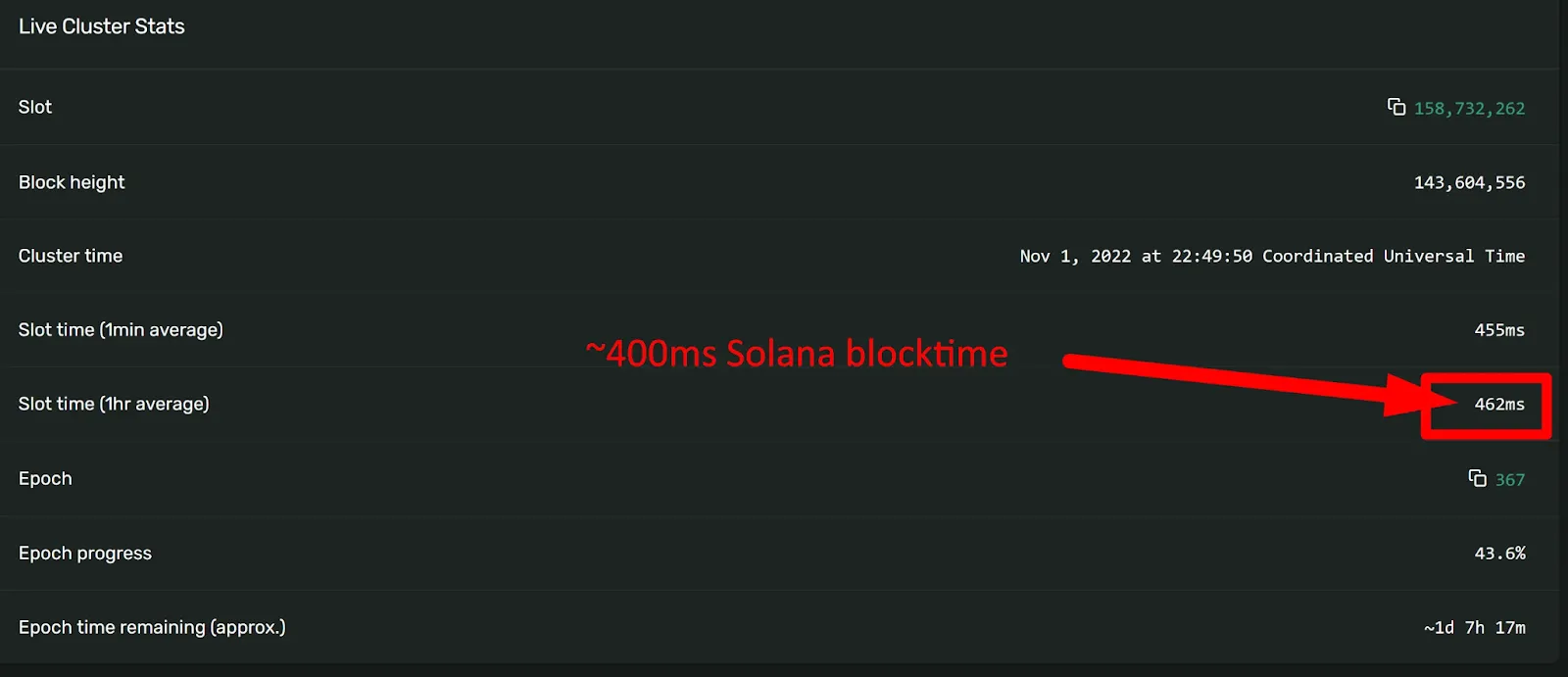

In theory, Solana finalizes a new block every 400 milliseconds, making it one of the fastest public blockchains by design. But in practice, RPC services often trail behind by 2 to 4 slots. That’s enough to miss a confirmation, delay a trade, or stall an interactive app.

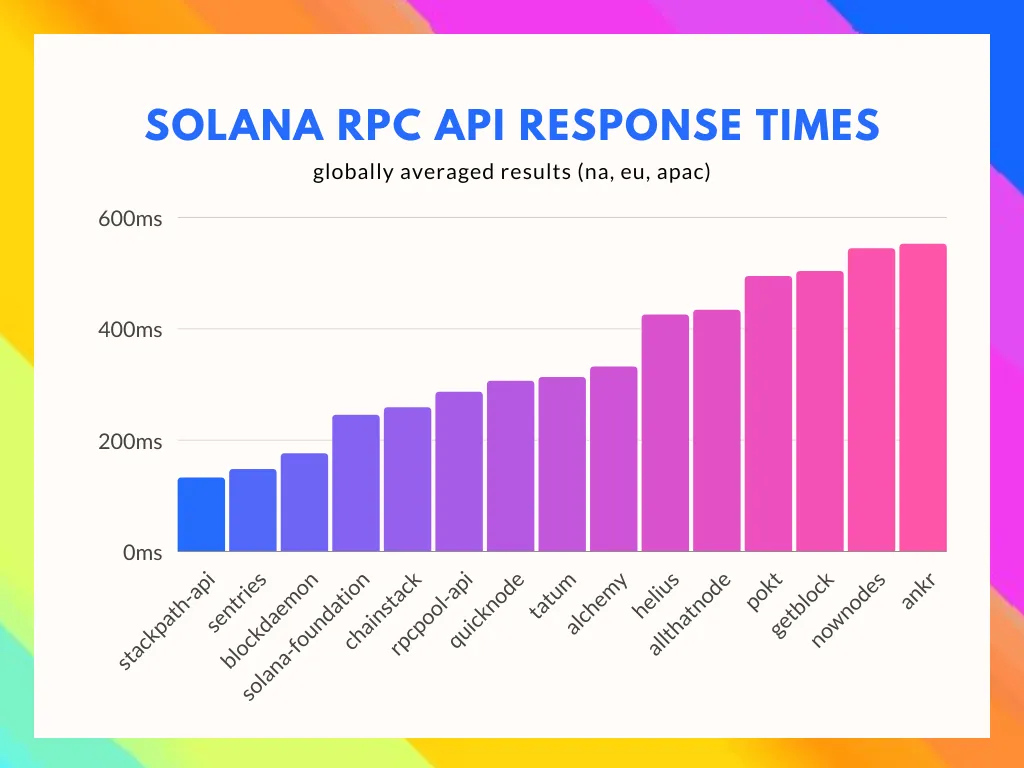

The issue isn’t with the Solana protocol itself. It’s with how most RPC providers handle performance under real network conditions. Marketing pages boast sub-100ms latencies and 99.9% uptime. But during congestion—high-frequency trading bursts, or validator churn—those promises break. Clients receive stale slot data, 429 errors, or silent drops in sendTransaction() calls.

For builders of time-sensitive Solana applications, from DeFi to gaming to MEV bots, the consequences are serious: missed execution windows, broken product logic, and degraded user experience.

This article breaks down what “real-time” should actually mean in Solana RPC—how to measure it, why it’s hard to deliver, and what infrastructure designs can close the gap.

The real problem behind “real-time” claims

Most Solana RPC providers deliver fast responses until they don’t. The issue isn’t everyday latency. It’s how infrastructure breaks under pressure, without warning or recovery.

During low-traffic conditions, shared RPC endpoints often return account data or transaction status in under 100ms. But when Solana hits throughput peaks—like NFT launches, validator restarts, or MEV-induced spikes—latency balloons and consistency degrades.

Even well-engineered dApps experience:

- Slot lag: RPC responses trail the current leader slot by 2–5 blocks. This delay makes it impossible to accurately simulate or pre-confirm transactions.

- Dropped transactions: sendTransaction() calls silently fail or time out due to rate-limited queues or under-prioritized relaying.

- Stale reads: Calls to getAccountInfo, getBlockHeight, or getProgramAccounts return outdated data, especially on high-churn accounts like DEX order books.

- Error 429 storms: Shared RPCs throttle bursts even under modest app volume—breaking wallet UIs, game logic, or trading bots in production.

These issues don’t just affect the long tail of apps. We’ve seen them impact:

- On-chain games, where frame logic is tied to block state

- NFT mint sites, where accurate slot timing and mempool feedback are critical

- Trading protocols and bots, where stale block height means missed arbitrage

- Wallets, where slow confirmations break trust and flow

The deeper problem? Most of these RPC failures happen without observable symptoms. You don’t get crash logs or 500s. You get stale data that looks correct and builds user-facing bugs that are hard to trace.

In a 2024 incident during a high-volume mint, several projects reported >3 slot lag and >1s RPC response latency across multiple providers—while Solana itself remained fully live and performant.

This is the core trust gap: Solana may be fast. But your access to it depends entirely on how your RPC infrastructure behaves under real-world pressure.

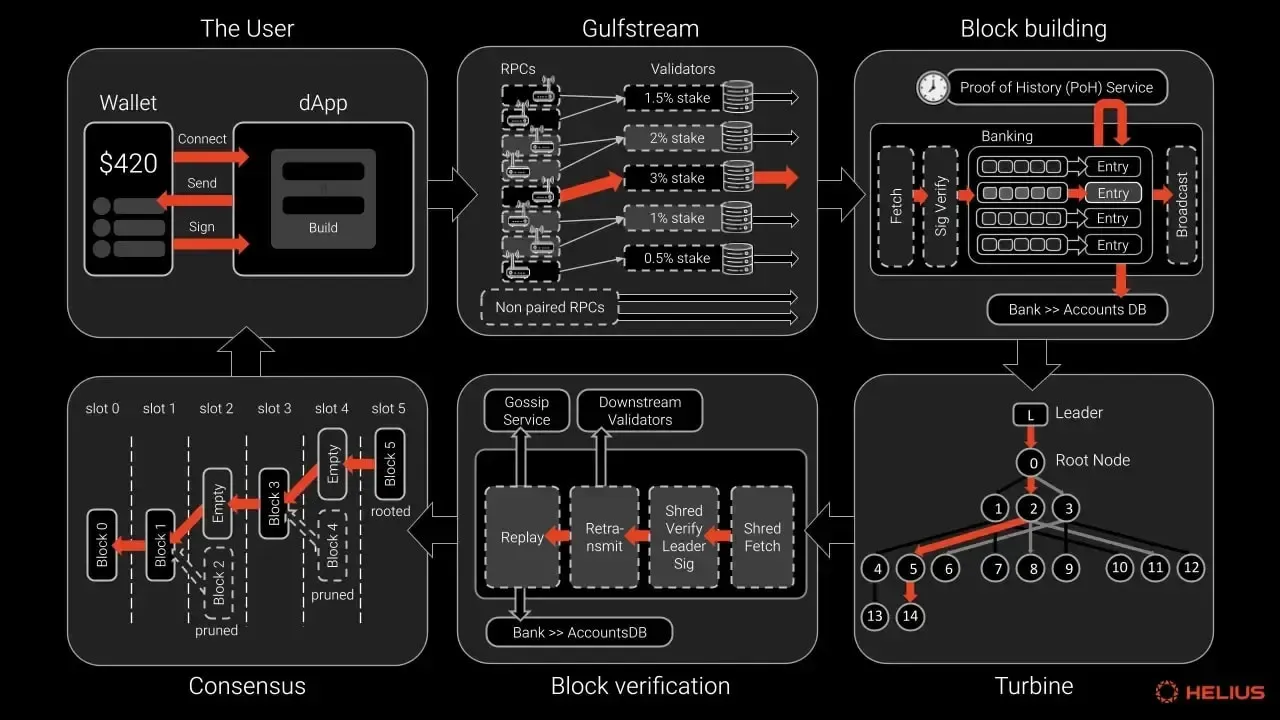

Common bottlenecks in Solana RPC architecture

While most Solana RPC providers advertise fast response times, few explain the technical tradeoffs behind their infrastructure. The real gap in “real-time” RPC emerges from architectural bottlenecks that only show up under load—and that most providers silently inherit by default.

1. Shared clusters and overloaded nodes

Many providers run shared RPC clusters with multi-tenant usage. While this model is cost-effective, it introduces congestion under even moderate bursts. Once CPU or bandwidth is saturated, Solana’s pipelined validator runtime gets throttled at the RPC layer, not the chain. This leads to:

- Delayed slot updates

- 429 Too Many Requests errors

- Inconsistent getProgramAccounts or simulateTransaction results

A 2024 performance report from Helius noted that shared RPC endpoints under peak load could trail leader slots by 3–5 blocks, impacting pre-confirmation logic and real-time reads.

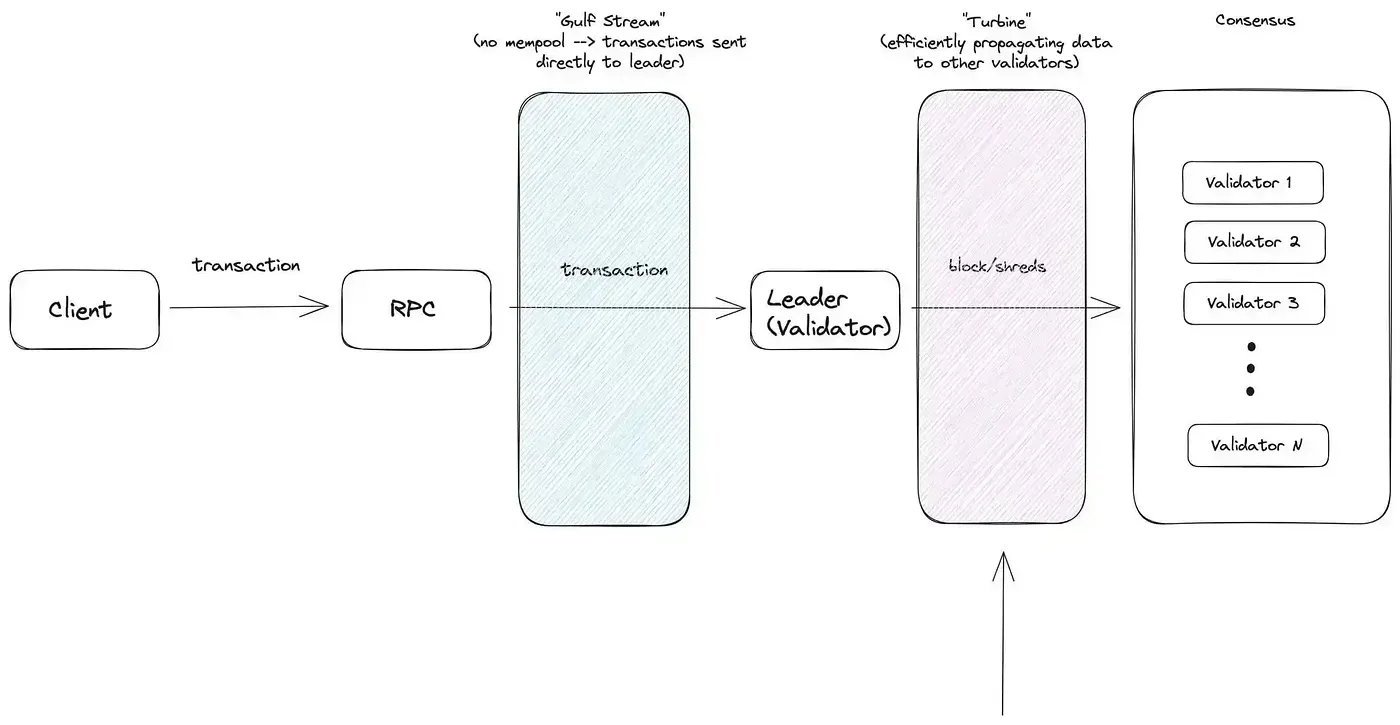

2. Absence of validator-co-located RPC

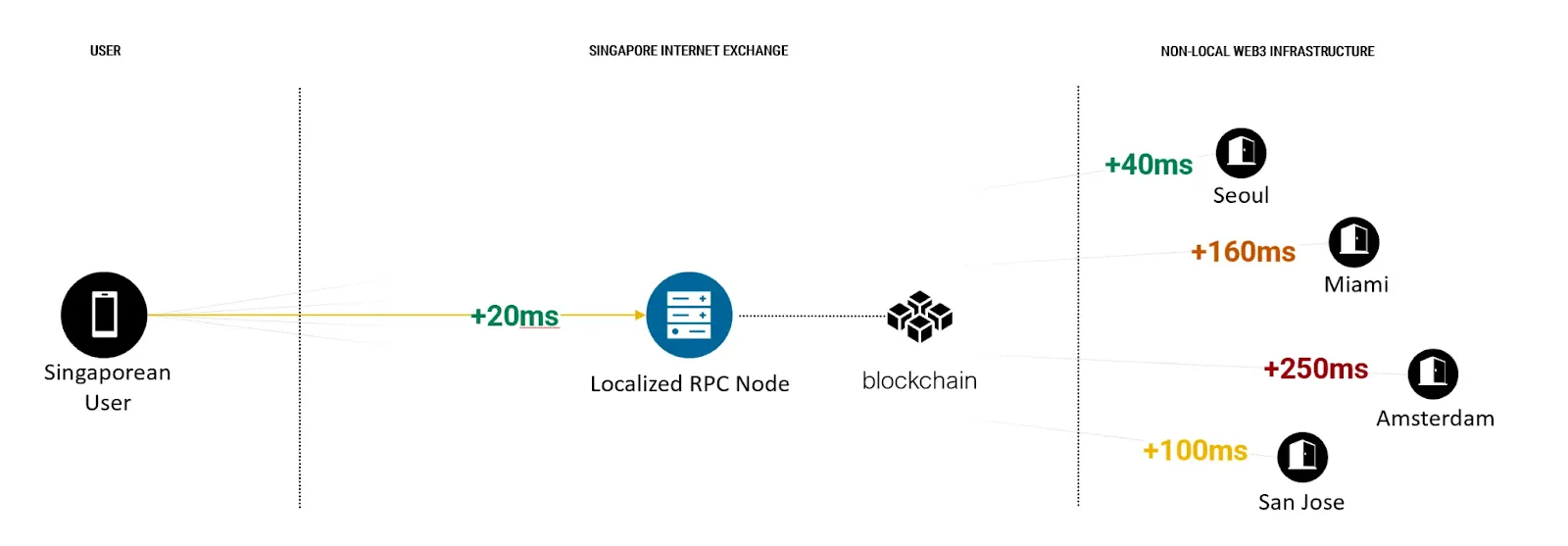

Most RPC providers operate outside of direct validator environments. Without local ledger access or slot leader sync, their endpoints can’t serve hot data. This increases slot desync risk and makes simulated transactions misaligned with actual fork state. According to Jito Labs, running RPCs without validator access leads to 50–80ms extra delay per call—a fatal margin in MEV or trading apps (Jito MEV Technical Overview).

3. No priority relaying or MEV bundle support

Standard Solana RPC uses sendTransaction() over HTTP, without slot-timed relaying. Under congestion, these transactions often miss the active leader window. Jito-integrated relayers or staked RPC setups with priority access solve this—but are still rare in most commercial RPC stacks. Without this layer, apps lose write reliability under pressure.

4. Lack of gRPC or WebSocket streaming

Polling APIs (HTTP-based get* methods) introduce artificial latency. While Solana natively supports gRPC and WebSocket subscriptions, many providers do not expose or optimize them. That leaves apps blind to slot transitions or account changes in real time—critical for games, trading UIs, or reactive apps.

5. Weak slot tracking and fork awareness

When RPC nodes rely solely on finalized data (32-slot confirmation), they sacrifice responsiveness. But operating on optimistic or pre-confirmed forks requires tight slot voting integration—which is often missing. As a result, developers encounter:

- Broken UX (e.g. transaction shows sent, but doesn’t land)

- Conflicting balances

- Errors in simulation vs actual execution

These issues are structural. They can’t be patched by CDNs or caching layers—only architectural redesign solves them.

How to architect real-time Solana RPC infrastructure

Delivering real-time performance on Solana isn’t about hitting a single latency metric. It requires building infrastructure that keeps pace with the chain’s 400ms slot production, propagates data near-instantly, and guarantees transaction inclusion even under peak congestion.

Slot-level synchronization is non-negotiable

A real-time RPC node must ingest, replay, and serve data nearly in lockstep with the cluster head. Any RPC node lagging by 2–3 slots (i.e. 800–1200ms) introduces stale reads and risks invalidating transactions due to expired blockhashes (which time out in 60–90 seconds).

To meet this requirement:

- Nodes must use fast disk I/O (e.g. NVMe SSDs), 512GB+ RAM, and CPU pinning to reduce replay lag.

- QUIC-based TPU forwarding or direct validator peering is essential to submit TXs within slot windows.

- Slot alignment must be monitored continuously; even slight desync during high-throughput events can cause missed trades or failed minting transactions.

Write throughput is the real bottleneck

Solana’s design pushes bottlenecks to the edge. Most developers optimize for fast reads, but write-path performance is the gating factor for real-time apps. During events like airdrops or arbitrage bursts, transactions must land in the current or next leader slot.

Key techniques:

- Use stake-weighted QoS (SWQoS) and staked TPU relays to guarantee transaction prioritization under congestion (Helius, 2023).

- Integrate Jito’s ShredStream or bloXroute’s Trader API for bundle forwarding and near-instant execution (bloxroute, 2024).

- Queue management and retry logic must optimize for Solana’s short-lived blockhash validity window.

Caching and indexing for low-latency reads

Account reads (e.g. getBalance, getAccountInfo) can cause significant lag if they hit disk or lock RocksDB.

High-performance RPC setups use:

- In-RAM caching for hot account and token data (via Redis or embedded memory stores)

- Prebuilt indexes for getProgramAccounts or historical lookups

- Slot-aware invalidation and write-through logic to ensure consistency

These techniques let most queries complete in <5ms even during spikes.

gRPC streaming beats polling

Polling (via REST or JSON-RPC) introduces latency and overhead. Real-time dApps now use gRPC streams fed by Solana’s Yellowstone Geyser plugin. This allows clients to receive:

- Slot changes

- Account updates

- Transaction confirmations

…as soon as the validator processes them, without needing to re-query. For example, a DEX UI can update in under 100ms on-chain state change via gRPC.

Load balancing and failover must be smart

Simple round-robin isn’t sufficient. Real-time RPC must:

- Route read vs write traffic to dedicated backends

- Monitor slot lag and auto-evict stale nodes from load balancer rotation

- Use geo-aware routing to serve clients from the lowest-latency region

- Maintain warm spares to reduce recovery time from hours to <15 minutes

The real-time stack in practice

These aren’t theoreticals. As described in our recent case study, RPC Fast implemented all the above for a high-frequency trading team handling 30M+ requests/day:

- Bare-metal Solana nodes with NVMe, 1TB RAM, and active-active clustering

- TPU forwarding with stake-weighted relay

- Jito integration for priority block inclusion

- gRPC streaming via Yellowstone Geyser

- Real-time observability and failure recovery within 10s

The outcome: near-100% uptime, sub-10ms latency, and zero TX dropouts under pressure.

Your path to real-time RPC on Solana

Delivering real-time RPC performance on Solana requires more than advertised millisecond latency. It requires end-to-end control of infrastructure, optimized data pathways, and deterministic transaction propagation. In practice, less than 10% of providers maintain slot lag under 1 during peak congestion or support gRPC-native data streams for low-latency reads—despite claiming real-time capabilities.

RPC Fast recently deployed such infrastructure for a quant trading client handling over 30 million daily RPC calls, the majority being getTransaction and getSignaturesForAddress. Their in-house nodes suffered from inconsistent latency, delayed slot propagation, and regular desyncs under load. Our deployment eliminated these issues by:

- Implementing a dual-node HA cluster with active-active failover

- Shifting data acquisition from REST polling to Yellowstone gRPC streaming

- Integrating Jito ShredStream and bloXroute Solana Trading API

- Using a pre-synced bare-metal node pool for 15-minute cold start recovery

The result: >99.99% uptime, measurable latency reduction across all methods, and complete removal of dropped response errors. The system also achieved an 83% first-block inclusion rate, verified through slot-aligned execution tracking.

For infrastructure engineers, the distinction is clear: marketing numbers alone don’t ensure consistency under load. Real-time RPC must withstand validator churn, indexing pressure, and market-level transaction spikes—often above 500 req/s sustained. Without features like deterministic request routing, sub-slot leader alignment, and message streaming under 6ms intervals, systems degrade silently under pressure.

The gap between stated SLAs and actual delivery widens as projects scale. This performance-expectation mismatch remains one of the biggest blockers for mission-critical Solana applications in 2025.

RPC Fast addresses that gap by delivering real, testable, auditable RPC infrastructure. If your protocol, exchange, or analytics product depends on fresh Solana state, predictable block writes, and zero packet loss, you need more than an endpoint. You need a platform engineered to deliver measurable outcomes.

.svg)

.svg)

.svg)